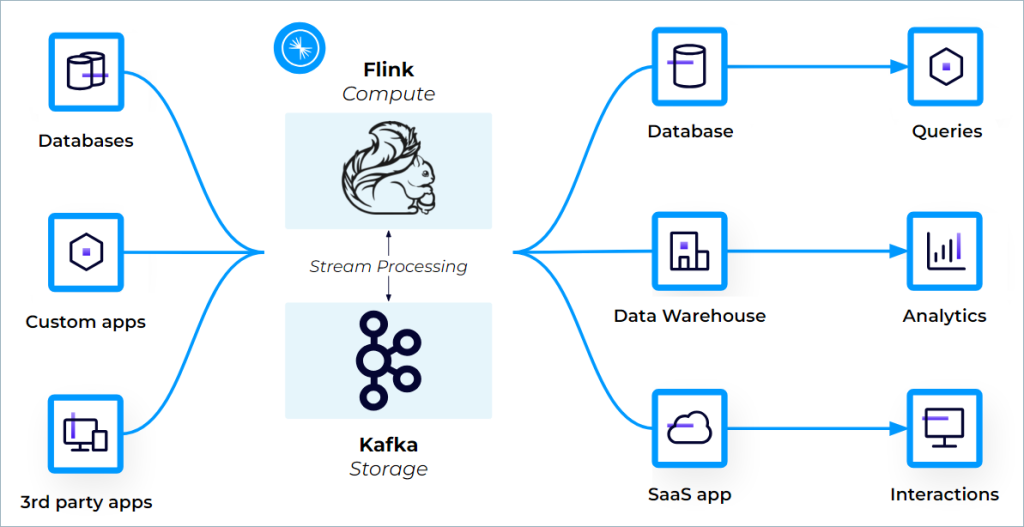

Apache Kafka, Apache Flink, and Apache Iceberg are among the most popular technologies in the data ecosystem. Kafka lets you move data around in real time, Flink lets you process that data according to your needs, and Iceberg, an open table format, organizes stored data into structured, navigable tables so that it’s ready for querying. All three are influencing how we build data systems.

New features are continuously being added to each of the three tools by their open source communities, often in collaboration with one another. As a result, best practices are constantly evolving, and data professionals need to stay on top of wider industry trends, such as the recent increased focus on data governance.

Here are three trends I’ve been seeing lately around the Kafka, Flink, and Iceberg communities. Each presents a new way for engineers to manage data and meet application needs.

Re-envisioning microservices as Flink streaming applications

A common way to process data is to pull it out of Kafka with a microservice, process it in the same or a different microservice, and then write it back to Kafka or another queue. Alternatively, you can pair Flink with Kafka to do all of the above, yielding a more reliable solution with lower latency, built-in fault tolerance, and stronger processing guarantees.

A Flink job can run continuously, processing data as it arrives rather than pulling it in discrete batches. Using Flink instead of a microservice also lets you leverage Flink’s built-in guarantees, such as exactly-once semantics. Flink’s two-phase commit protocol gives developers end-to-end exactly-once processing guarantees: an event written to Kafka, for example, is reflected exactly once in the output of a Kafka-to-Flink-to-Kafka pipeline, even if the job fails and restarts. Note that the type of microservice Flink best replaces is one dedicated to data processing, such as a service that updates state for operational analytics.
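To make the two-phase commit idea concrete, here is a toy, in-memory simulation in Python. It is not Flink’s API; the class and function names (`ToySink`, `run_job`) are hypothetical, and the phases only loosely mirror the pre-commit/commit/abort lifecycle of Flink’s `TwoPhaseCommitSinkFunction`. Writes stay invisible until the checkpoint they belong to completes, so when a simulated crash rewinds the source to the last checkpointed offset, the replayed records do not show up twice. The same run without transactions produces a duplicate.

```python
from typing import Dict, List, Optional


class ToySink:
    """In-memory stand-in for a Kafka topic written by a streaming job (hypothetical).

    transactional=True: writes become visible only when their checkpoint commits,
    similar in spirit to Kafka transactions read with isolation.level=read_committed.
    transactional=False: writes are visible immediately (at-least-once behavior).
    """

    def __init__(self, transactional: bool) -> None:
        self.transactional = transactional
        self.visible: List[str] = []                  # what downstream consumers can read
        self.open_txn: List[str] = []                 # writes since the last checkpoint
        self.pre_committed: Dict[int, List[str]] = {}

    def write(self, record: str) -> None:
        if self.transactional:
            self.open_txn.append(record)
        else:
            self.visible.append(record)

    def pre_commit(self, checkpoint_id: int) -> None:
        # Phase 1 (checkpoint barrier reaches the sink): flush and park the open transaction.
        self.pre_committed[checkpoint_id] = self.open_txn
        self.open_txn = []

    def commit(self, checkpoint_id: int) -> None:
        # Phase 2 (checkpoint complete everywhere): make the parked writes visible.
        self.visible.extend(self.pre_committed.pop(checkpoint_id))

    def abort(self) -> None:
        # On failure, discard everything that was not yet committed.
        self.open_txn = []
        self.pre_committed.clear()


def run_job(source: List[str], checkpoint_every: int,
            fail_at: Optional[int], transactional: bool) -> List[str]:
    sink = ToySink(transactional)
    offset = 0          # current read position in the source, like a Kafka offset
    checkpointed = 0    # source offset stored in the last completed checkpoint
    checkpoint_id = 0
    has_failed = False
    while offset < len(source):
        if offset == fail_at and not has_failed:
            # Simulated crash: roll back the sink and rewind the source to the checkpoint.
            has_failed = True
            sink.abort()
            offset = checkpointed
            continue
        sink.write(source[offset].upper())  # the "processing" step
        offset += 1
        if offset % checkpoint_every == 0 or offset == len(source):
            checkpoint_id += 1
            sink.pre_commit(checkpoint_id)
            checkpointed = offset
            # Simplification: the checkpoint is treated as complete immediately.
            sink.commit(checkpoint_id)
    return sink.visible


print(run_job(list("abcde"), 2, 3, transactional=True))   # exactly once: A B C D E
print(run_job(list("abcde"), 2, 3, transactional=False))  # "C" appears twice
```

The key design point is that the source offset and the sink’s pre-committed transaction are captured in the same checkpoint, so a restart either replays records whose output was discarded or skips records whose output was already committed, never both.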

Use Flink to quickly apply AI models to your data with SQL

Using Kafka and Flink together allows you to move and process data in real time and create high-quality, reusable data streams. These capabilities are essential for real-time, compound AI applications, which need reliable, readily available data for real-time decision-making. Consider the retrieval-augmented generation (RAG) pattern, which supplements whatever model you use with timely, high-quality context to improve its responses and mitigate hallucinations.
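As a rough illustration of "right-in-time" context, the Python sketch below keeps the newest fact per customer from a stream of change events and prepends it to a question before the question would go to a model. It assumes a simple key-based lookup rather than the vector search many RAG systems use, and the event fields (`customer_id`, `attribute`, `value`) and helper names are hypothetical.

```python
from typing import Dict, Iterable


class LatestContext:
    """Materialized view of the newest value per (customer, attribute), fed by a stream."""

    def __init__(self) -> None:
        self._facts: Dict[str, Dict[str, str]] = {}

    def apply(self, event: Dict[str, str]) -> None:
        # Upsert: a newer event for the same customer and attribute replaces the old value.
        self._facts.setdefault(event["customer_id"], {})[event["attribute"]] = event["value"]

    def apply_all(self, events: Iterable[Dict[str, str]]) -> None:
        for event in events:
            self.apply(event)

    def lookup(self, customer_id: str) -> Dict[str, str]:
        # Return a copy so callers cannot mutate the store.
        return dict(self._facts.get(customer_id, {}))


def build_prompt(question: str, context: Dict[str, str]) -> str:
    """Augments the user's question with the retrieved, up-to-date context."""
    facts = "\n".join(f"- {key}: {value}" for key, value in sorted(context.items()))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{facts}\n"
        f"Question: {question}"
    )


store = LatestContext()
store.apply_all([
    {"customer_id": "c42", "attribute": "order_status", "value": "shipped"},
    {"customer_id": "c42", "attribute": "carrier", "value": "ACME Freight"},
    {"customer_id": "c42", "attribute": "order_status", "value": "delivered"},
])
print(build_prompt("Where is my order?", store.lookup("c42")))
```

Because the store is updated from the stream, the prompt reflects the latest order status ("delivered") rather than a stale snapshot, which is the property that helps keep the model's answer grounded.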

Using Flink SQL, you can write simple SQL statements that call a model of your choice, such as those available through OpenAI, Azure OpenAI, or Amazon Bedrock. In practice, you can configure any AI model that exposes a REST API for Flink to use when processing your data stream, which means you can also use a custom, in-house AI model.

There are countless use cases for AI, but models are commonly used for classification, clustering, and regression. You could use them, for example, for sentiment analysis of text or for scoring sales leads.
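The per-record flow behind "call a model from your stream" can be sketched in plain Python: each event passes through a prediction function and comes out with an extra label column. This is not Flink SQL; `rest_predictor`, `enrich`, and the endpoint contract (`POST {"text": ...}` returning `{"label": ...}`) are hypothetical stand-ins for an in-house model. A keyword-based classifier substitutes for the model so the example runs offline.

```python
import json
import urllib.request
from typing import Callable, Dict, Iterable, Iterator

Predictor = Callable[[str], str]


def rest_predictor(url: str, timeout: float = 5.0) -> Predictor:
    """Wraps a hypothetical in-house model endpoint.

    Assumed contract (not a real product API):
      POST url with {"text": "..."}  ->  {"label": "..."}
    """
    def predict(text: str) -> str:
        req = urllib.request.Request(
            url,
            data=json.dumps({"text": text}).encode("utf-8"),
            headers={"Content-Type": "application/json"},
            method="POST",
        )
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return json.loads(resp.read().decode("utf-8"))["label"]
    return predict


def enrich(events: Iterable[Dict[str, str]], predict: Predictor,
           field: str = "text") -> Iterator[Dict[str, str]]:
    """Streams events through the model, adding a 'label' column to each one."""
    for event in events:
        yield {**event, "label": predict(event[field])}


def keyword_sentiment(text: str) -> str:
    """Offline stand-in for a real sentiment model."""
    lowered = text.lower()
    if any(word in lowered for word in ("great", "love", "fast")):
        return "positive"
    if any(word in lowered for word in ("broken", "slow", "refund")):
        return "negative"
    return "neutral"


reviews = [
    {"id": "r1", "text": "Great product, fast shipping"},
    {"id": "r2", "text": "Arrived broken, want a refund"},
    {"id": "r3", "text": "It is a chair"},
]
for row in enrich(reviews, keyword_sentiment):
    print(row["id"], row["label"])
```

Swapping `keyword_sentiment` for `rest_predictor("http://...")` pointed at a real service is the only change the pipeline would need, which mirrors the idea of configuring any REST-accessible model.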

Beyond its AI capabilities, Flink plays exceptionally well with everyone’s favorite streaming technology, Kafka—you may have heard of it. And I think that’s part of the reason why Flink is going to remain popular and remain the community’s choice for stream processing.

Leveraging community-built Apache Iceberg tools

Community contributions to Iceberg have been strong in recent months as more developers and organizations adopt this open table format to manage large analytical data sets, especially those stored in data lakes and data warehouses. For example, community members have built migration tools that move Iceberg catalogs from one cloud provider to another, as well as tools that analyze the health of Iceberg tables.

Another community contribution is the Puffin file format, which stores blobs of statistics and other metadata (such as data sketches for estimating distinct-value counts) about data managed by an Iceberg table. Even the functionality that lets you read your Iceberg data back into Flink is the result of contributions by Flink and Iceberg community committers.
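To show what "a file of blobs plus metadata" means, here is a minimal Python sketch of the Puffin layout as we read the Iceberg spec: a `PFA1` magic, the raw blobs, then a footer made of magic, a JSON payload describing each blob, a 4-byte little-endian payload size, 4 flag bytes, and a closing magic. It omits footer compression and per-blob `compression-codec`, the blob bytes are placeholders rather than a real serialized sketch, and `write_puffin`/`read_footer`/`read_blob` are hypothetical helpers, not part of any Iceberg library.

```python
import json
import struct
from typing import Any, Dict, List, Tuple

MAGIC = b"PFA1"  # 0x50 0x46 0x41 0x31: start of file and both ends of the footer


def write_puffin(blobs: List[Tuple[str, List[int], bytes]],
                 snapshot_id: int, sequence_number: int) -> bytes:
    """Layout: Magic, Blob1..BlobN, Footer (Magic, payload, payload size, flags, Magic)."""
    out = bytearray(MAGIC)
    blob_metadata: List[Dict[str, Any]] = []
    for blob_type, field_ids, payload in blobs:
        blob_metadata.append({
            "type": blob_type,
            "fields": field_ids,           # Iceberg field IDs the statistic describes
            "snapshot-id": snapshot_id,
            "sequence-number": sequence_number,
            "offset": len(out),            # where this blob starts in the file
            "length": len(payload),        # no compression-codec: stored uncompressed
        })
        out += payload
    footer_payload = json.dumps({"blobs": blob_metadata}).encode("utf-8")
    out += MAGIC
    out += footer_payload
    out += struct.pack("<i", len(footer_payload))  # FooterPayloadSize, little-endian
    out += bytes(4)                                # flags all zero: footer not compressed
    out += MAGIC
    return bytes(out)


def read_footer(data: bytes) -> Dict[str, Any]:
    """Reads the JSON footer by walking backward from the end of the file."""
    if data[:4] != MAGIC or data[-4:] != MAGIC:
        raise ValueError("not a Puffin file")
    if data[-8] & 0x01:  # byte 0 of the flags, bit 0: footer payload compressed
        raise ValueError("compressed footer payload is not handled in this sketch")
    (payload_size,) = struct.unpack("<i", data[-12:-8])
    start = len(data) - 12 - payload_size
    if data[start - 4:start] != MAGIC:
        raise ValueError("footer magic not found")
    return json.loads(data[start:start + payload_size].decode("utf-8"))


def read_blob(data: bytes, blob: Dict[str, Any]) -> bytes:
    return data[blob["offset"]:blob["offset"] + blob["length"]]


# Placeholder payload, not a real serialized theta sketch.
puffin = write_puffin([("apache-datasketches-theta-v1", [1], b"placeholder-sketch")],
                      snapshot_id=42, sequence_number=7)
footer = read_footer(puffin)
for blob in footer["blobs"]:
    print(blob["type"], blob["fields"], read_blob(puffin, blob))
```

Putting the metadata in a footer lets a writer stream blobs out without knowing their sizes in advance, while a reader only needs to seek to the end of the file to find every blob.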

As more contributors and even vendors join the broader Iceberg community, the value of your data, wherever it lives in your architecture, will be easier to unlock than ever. When combined with Kafka and Flink applications and a shift-left approach to governance, Iceberg tables can dramatically accelerate and scale how you build real-time analytics use cases.

Stay up-to-date with the latest in data streaming engineering

Staying current with the state of the art in Kafka, Flink, and Iceberg means keeping an eye on the continuous stream of KIPs (Kafka Improvement Proposals), FLIPs (Flink Improvement Proposals), and Iceberg pull requests coming from their respective communities. Given how dominant these three technologies are in their core functions, and how well they work together, keeping pace with trends and skills in this growing space will be well worthwhile.

Adi Polak is director of advocacy and developer experience engineering at Confluent.

—

New Tech Forum provides a venue for technology leaders—including vendors and other outside contributors—to explore and discuss emerging enterprise technology in unprecedented depth and breadth. The selection is subjective, based on our pick of the technologies we believe to be important and of greatest interest to InfoWorld readers. InfoWorld does not accept marketing collateral for publication and reserves the right to edit all contributed content. Send all inquiries to doug_dineley@foundryco.com.