Efficient retrieval-augmented generation (RAG) requires a good vector search of the documents you’re trying to query. While almost all the major databases now include some kind of embedding algorithms, vector storage, vector indexing, and vector search, standalone vector search and storage systems such as Qdrant (pronounced “quadrant”) have their place in the market, especially for production applications.

Qdrant claims to offer the best available performance and handling of vectors, as well as advanced one-stage filtering, storage optimization, quantization, horizontal and vertical scalability, payload-based sharding, and flexible deployment. It is also integrated with many popular RAG frameworks and large language models (LLMs), and it’s offered both as open-source software and cloud services.

Qdrant recently introduced BM42, a pure vector-based hybrid search model that delivers more accurate and efficient retrieval for modern RAG applications. The BM42 similarity ranking (relevancy) algorithm replaces 50-year-old text-based search engines for RAG and AI applications.

Qdrant competes directly with Weaviate, Elasticsearch, and Milvus (all open source); Pinecone (not open source); and Redis (mixed licensing). It competes indirectly with every database that supports vector search, which is most of them as of this writing, as well as the FAISS library (for searching multimedia documents) and Chroma (specialized for AI).

What is Qdrant?

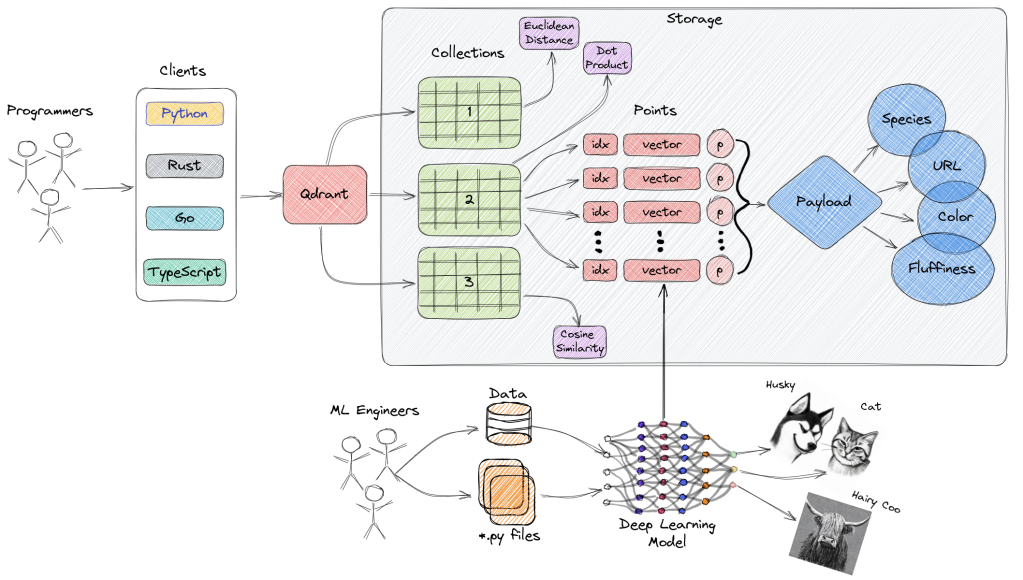

At its heart, Qdrant is a vector database. What does that mean? Essentially, vector databases store and query high-dimensional vectors efficiently. The vectors represent the features or attributes of the object that will be searched; creating the vectors is an embedding process. In addition to the vectors themselves, the vector database stores a unique ID per vector and a payload for the vector.

Why do we need vector databases? The short answer is “for similarity search, including RAG.” We’ll discuss this in more detail in the next section, which covers Qdrant use cases.

At an extremely high level, searching a vector database is a matter of finding some nearest neighbors to the embedding of the search query using some distance metric. There are lots of things you can do to make this search go quickly, starting with keeping the vectors in memory as much as possible, using an efficient approximate nearest neighbor (ANN) algorithm, using efficient indexes, and reducing the precision of the vectors (quantizing them) in order to be able to hold more of them in memory.

Vector databases often use specialized data structures and indexing techniques such as Hierarchical Navigable Small World (HNSW), which is used to implement Approximate Nearest Neighbors. Vector databases provide fast similarity and semantic search while allowing users to find vectors that are the closest to a given query vector based on some distance metric. The most commonly used distance metrics are Euclidean distance, cosine similarity, and dot product, all of which are supported by Qdrant.

Distance metrics are only hard to visualize because the vectors tend to have high dimensionality. Euclidean distance is the square root of the sum of the squares of the differences between the coordinates of two vectors. Dot product is the sum of the products of the matching coordinates of two vectors. Cosine similarity uses the cosine of the angle between two vectors; this is easiest to visualize in two or three dimensions, but no matter how high the dimensionality, the cosine ranges from -1 (completely dissimilar) to 1 (completely similar, the angle is 0).

As you can see in the diagram below, Qdrant’s architecture ties together client requests, embeddings of the stored vectors produced by a deep learning model, and server-side search. Data points are organized into Collections, which are named, searchable sets of points. The dimensionality and metric requirements for each point in a Collection have to match, and both of these are set when the Collection is created. If you need more flexibility, named vectors can be used to have multiple vectors in a single point, each of which can have their own dimensionality and metric requirements.

Qdrant

Qdrant has two options for storage, in-memory storage and Memmap storage. In-memory storage stores all vectors in RAM, and has the highest speed since disk access is required only for persistence. Memmap storage creates a virtual address space associated with the file on disk. Memmap storage often requires much less RAM than in-memory storage. HNSW indexes can also use Memmap storage if you want. Payloads can be in-memory or on-disk; the latter is best if the payloads are large. The disk storage uses RocksDB.

Because Qdrant allows for searching payload fields as well as ANN search on vectors, putting the payload on disk can introduce some latency. You can ameliorate this by creating a payload index for each field used in filtering conditions, to avoid most disk access.

The easiest way to install Qdrant locally is to docker pull qdrant/qdrant, then run it:

docker run -p 6333:6333 -p 6334:6334

-v $(pwd)/qdrant_storage:/qdrant/storage:z

qdrant/qdrant

Finally, you can code against Qdrant in Python, Go, Rust, JavaScript/TypeScript, .NET/C#, Java, Elixir, PHP, or Ruby. The first five languages are supported by Qdrant; the rest use contributed drivers.

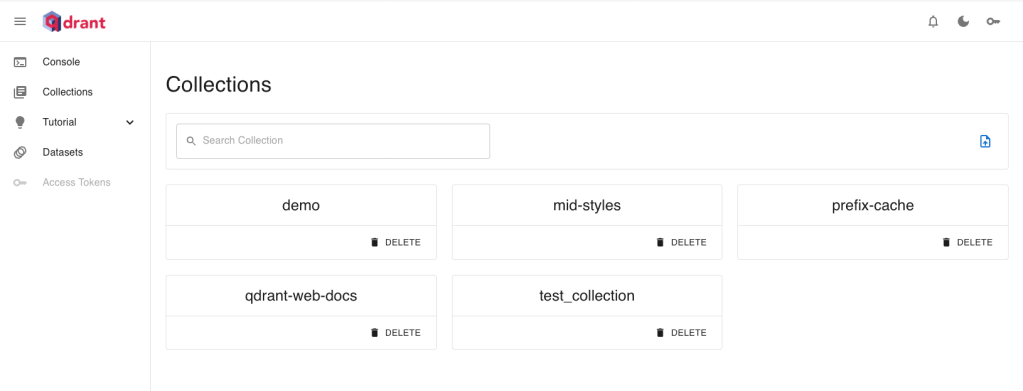

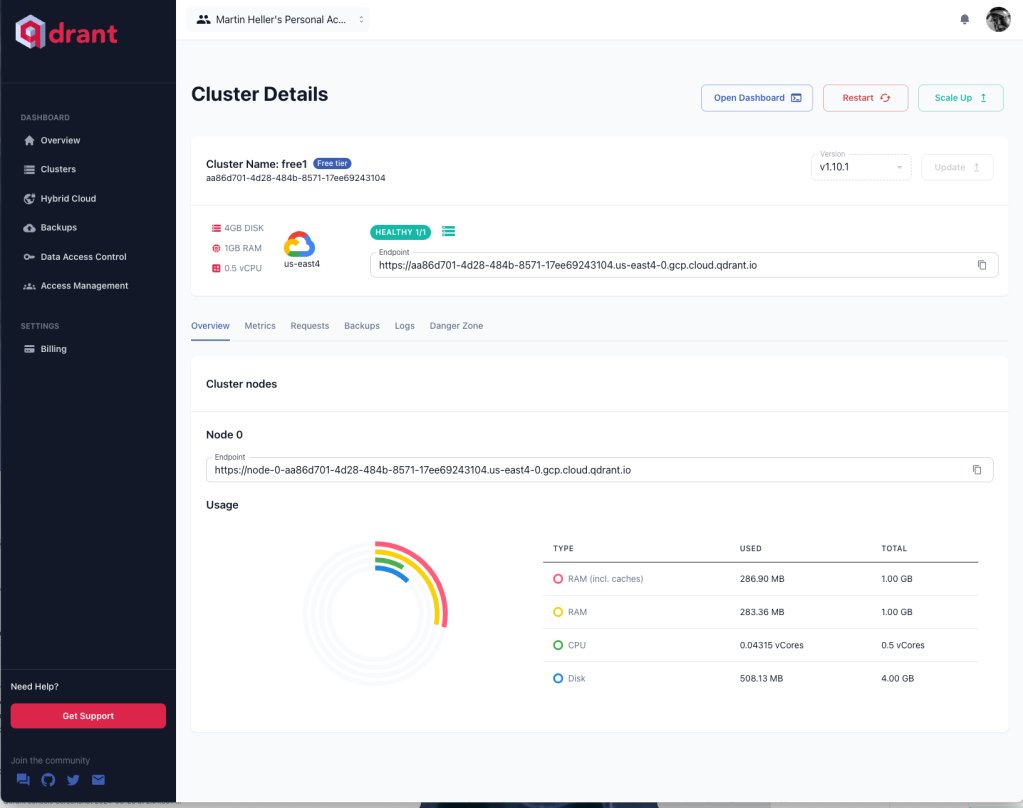

Using the Qdrant Cloud is even easier. You can start by logging in and creating a free cluster, which you can explore from the GUI and program against just like you would a local server. If you need more than 1 GB of RAM and 4 GB of disk, you can provide a credit card and upgrade to a paid cluster or clusters.

Note that if you create a free cluster and stop using it, Qdrant will email you about “Cluster inactivity.” They’ll suspend the cluster if it continues to be idle, and eventually delete it. Your possible actions to avoid deletion are to use the free cluster or upgrade it to a paid cluster.

Qdrant use cases

The major use case for Qdrant are retrieval-augmented generation, advanced search, data analysis and anomaly detection, and recommendation systems. Qdrant supplies efficient nearest neighbor search and payload filtering for retrieval-augmented generation. For advanced search, Qdrant processes high-dimensional data, enables nuanced similarity searches, and understands semantics in depth, as well as handling multimodal data with fast and accurate search algorithms.

Qdrant improves data analysis and anomaly detection by using vector search to quickly identify patterns and outliers in complex data sets, which supports real-time anomaly detection for critical applications. Qdrant has a flexible Recommendation API that features options such as a best score recommendation strategy, which allows you to use multiple vectors in a single query to improve result relevancy.

Qdrant integrations

Qdrant is integrated with popular frameworks such as LangChain, LlamaIndex, and Haystack. Qdrant partners with LLM providers such as OpenAI, Cohere, Gemini, and Mistral AI.

Examples and/or documentation of Qdrant integrations include:

- Cohere – Use Cohere embeddings with Qdrant (blogpost)

- DocArray – Use Qdrant as a document store in DocArray

- Haystack – Use Qdrant as a document store with Haystack (blogpost)

- LangChain – Use Qdrant as a memory backend for LangChain (blogpost)

- LlamaIndex – Use Qdrant as a vector store with LlamaIndex

- OpenAI – ChatGPT retrieval plugin – Use Qdrant as a memory back end for ChatGPT

- Microsoft Semantic Kernel – Use Qdrant as persistent memory with Semantic Kernel

Vector search benchmarks

Qdrant ran a bunch of benchmarks that compared Qdrant with open-source rivals Weaviate, Elasticsearch, Redis, and Milvus, each running on a single node with 100 search threads. You can explore the results online. While I rarely trust vendor benchmarks of other vendors’ products, Qdrant has gone to some trouble to make these reproducible.

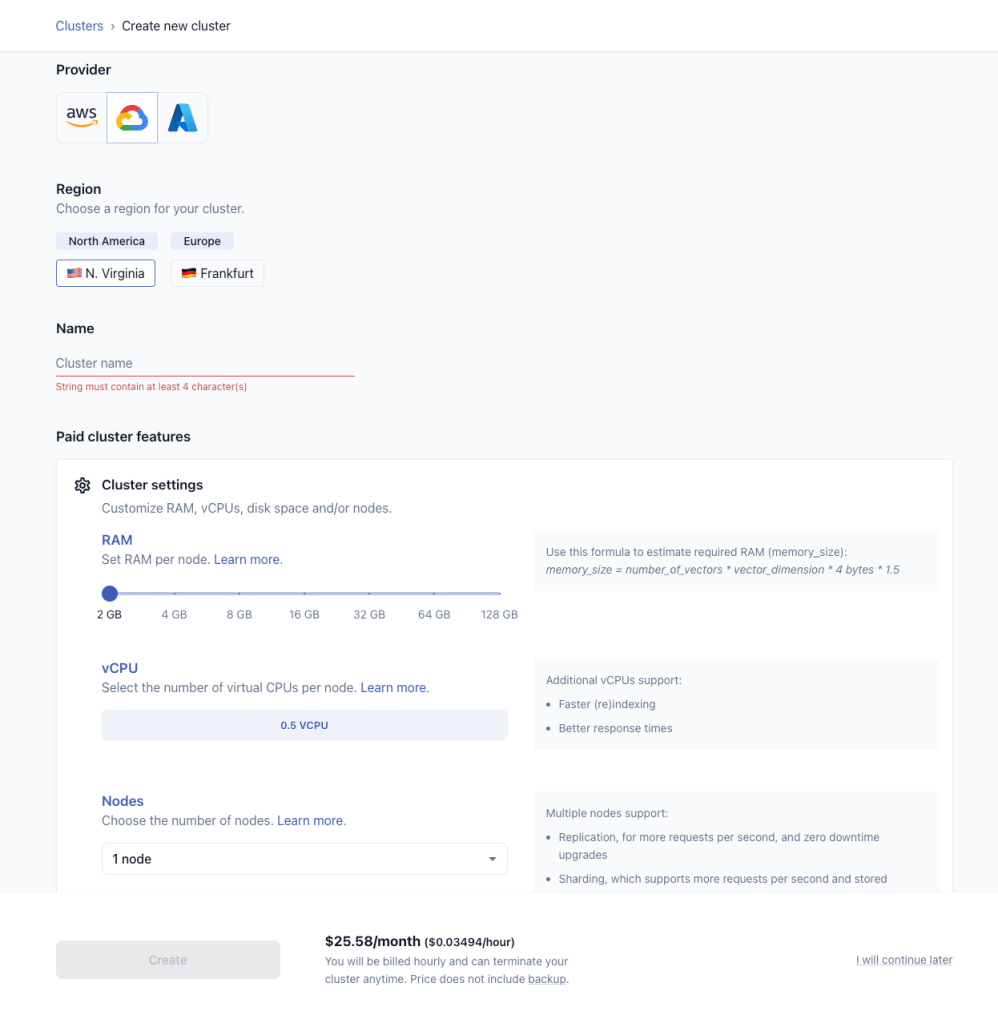

Qdrant Cloud

Qdrant Cloud runs fully managed clusters on multiple regions of Amazon Web Services, Google Cloud Platform, and Microsoft Azure. It offers central cluster management, horizontal and vertical scaling, high availability, auto-healing, central monitoring, log management and alerting, backup & disaster recovery, zero-downtime upgrades, and unlimited users. The screenshots below illustrate many of Qdrant Cloud’s capabilities.

Creating a Qdrant cluster is a simple matter of filling out a web form. This is a paid cluster, which has more options than a free cluster. Google Cloud Platform is currently the least expensive of the three supported cloud providers.

IDG

The collections I currently have loaded into my free Qdrant Cloud cluster came from running demo or tutorial code, or from loading sample data sets.

IDG

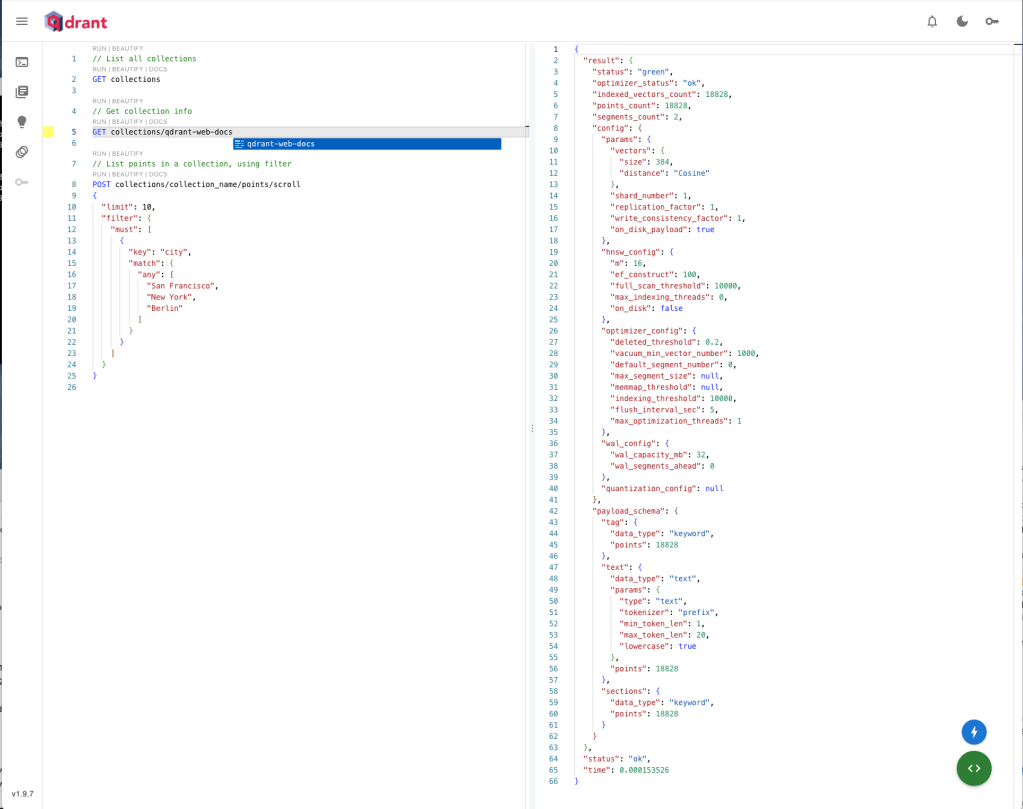

The Qdrant Cloud console allows you to run requests against your clusters interactively. Notice the Run|Beautify|Docs links above the code. The results of the most recent request are displayed at the right. The buttons at the lower right bring up additional commands.

IDG

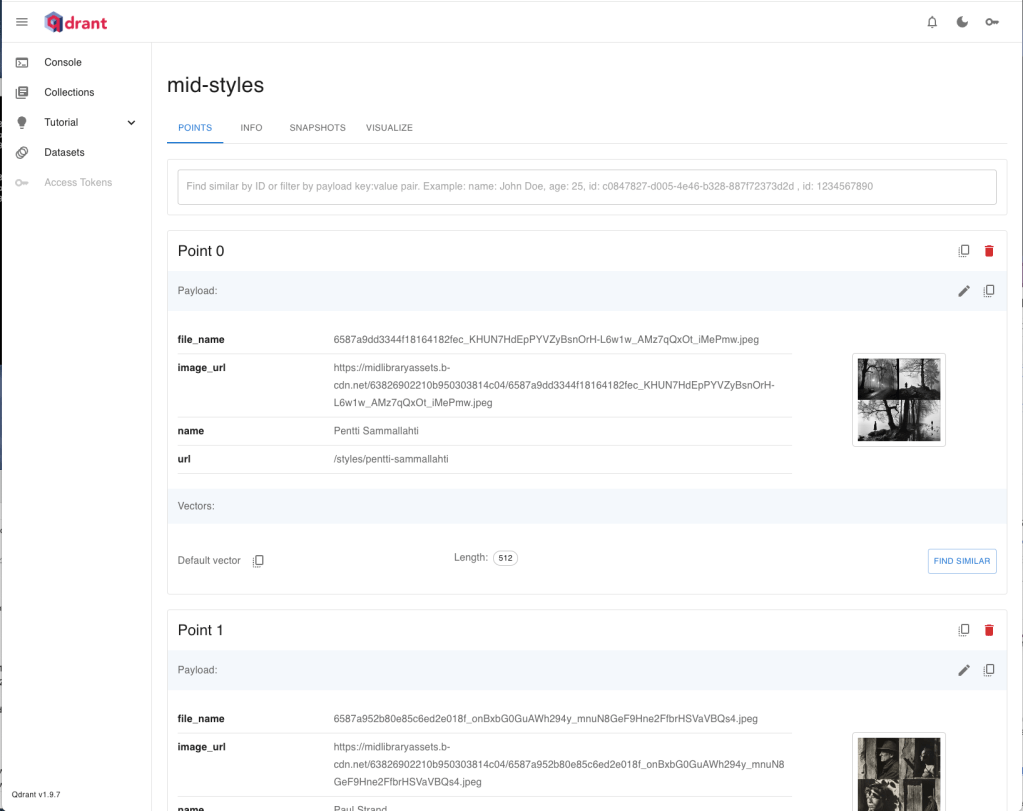

Here we are viewing the points in the mid-styles collection, which include the links to images in their payloads. Note that the vectors are not displayed. You wouldn’t want to look at 512-length vectors in the web interface, but if you need to see them you can copy them and paste them into an editor. You can perform a vector similarity search from any point by clicking the “Find Similar” button at the right.

IDG

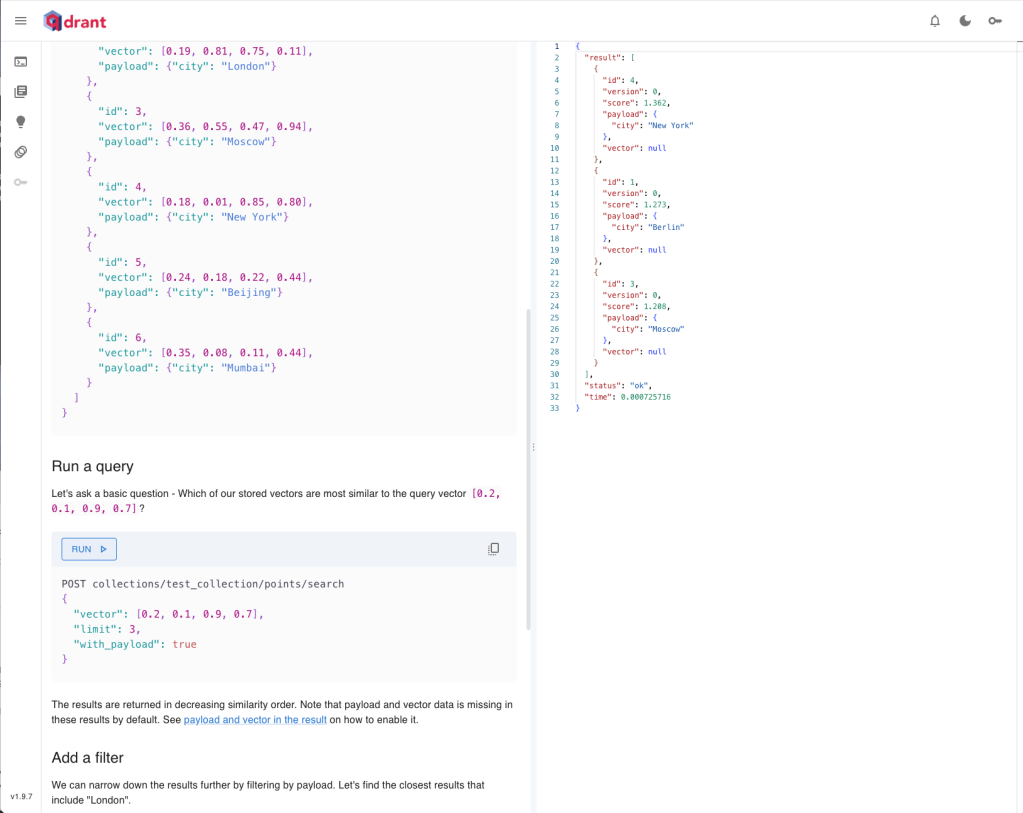

The Qdrant Cloud interactive tutorial provides a console with the code already loaded. You can click on the Run button for each block to proceed through the steps. The result displayed at the right is from the simple Post vector search query at the left.

IDG

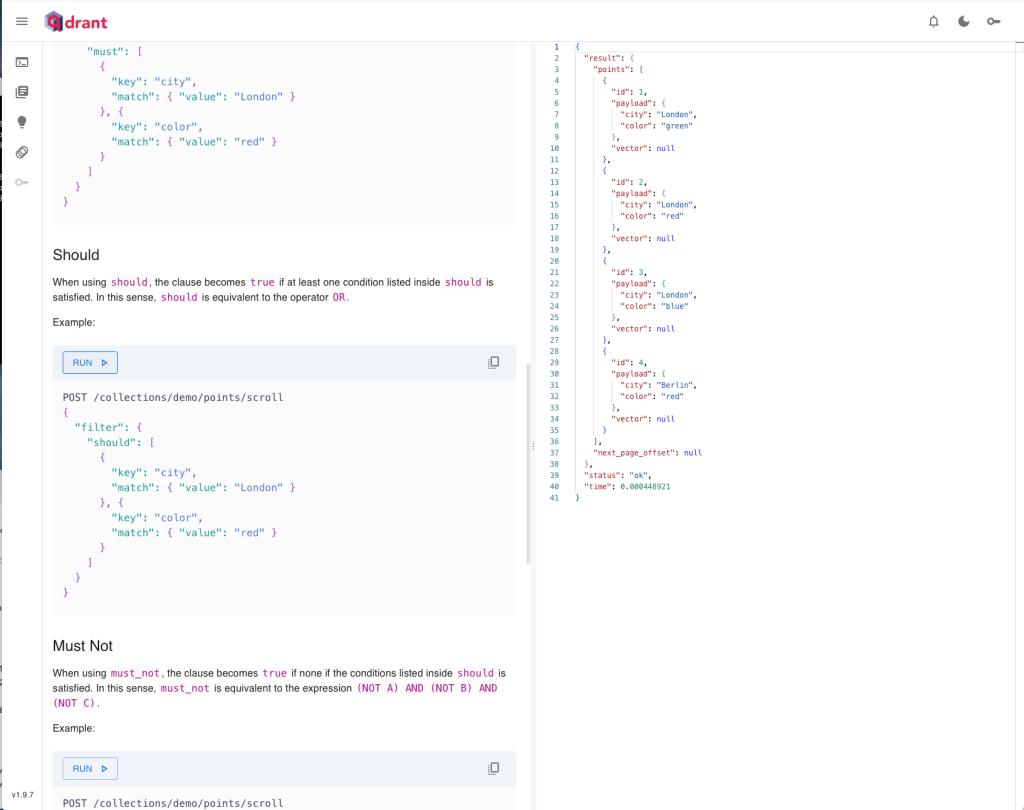

Qdrant allows filtering by payload as well as vector search, and can do both simultaneously. Filter keywords include “must,” which is a Boolean AND operator; “should,” which is a Boolean OR operator, and “must not,” which is a negation operator as explained at the lower left. The results at the right are from a “should” filter.

IDG

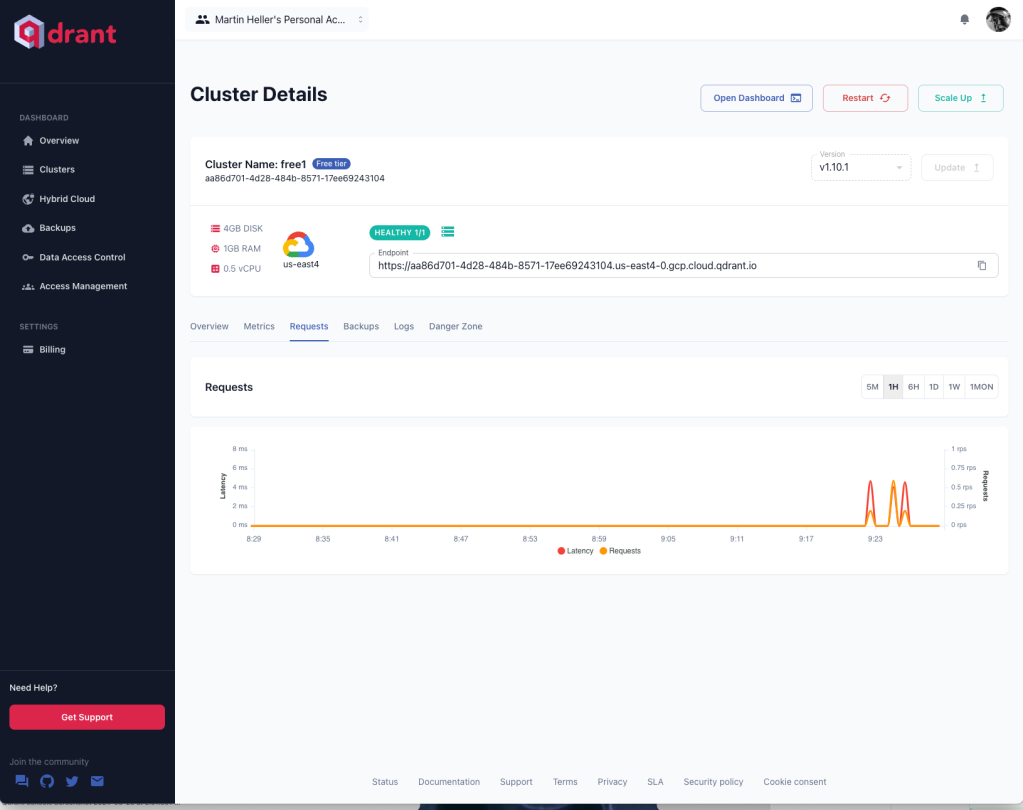

You can view the Qdrant Cloud cluster status from the cluster details. This is my free cluster, which as you can see is healthy and is only using a fraction of its capacity.

IDG

You can also view the requests to the cluster over time, and see their latency, which is very low for these requests because I’m just playing around interactively, not running an application with a heavy load.

IDG

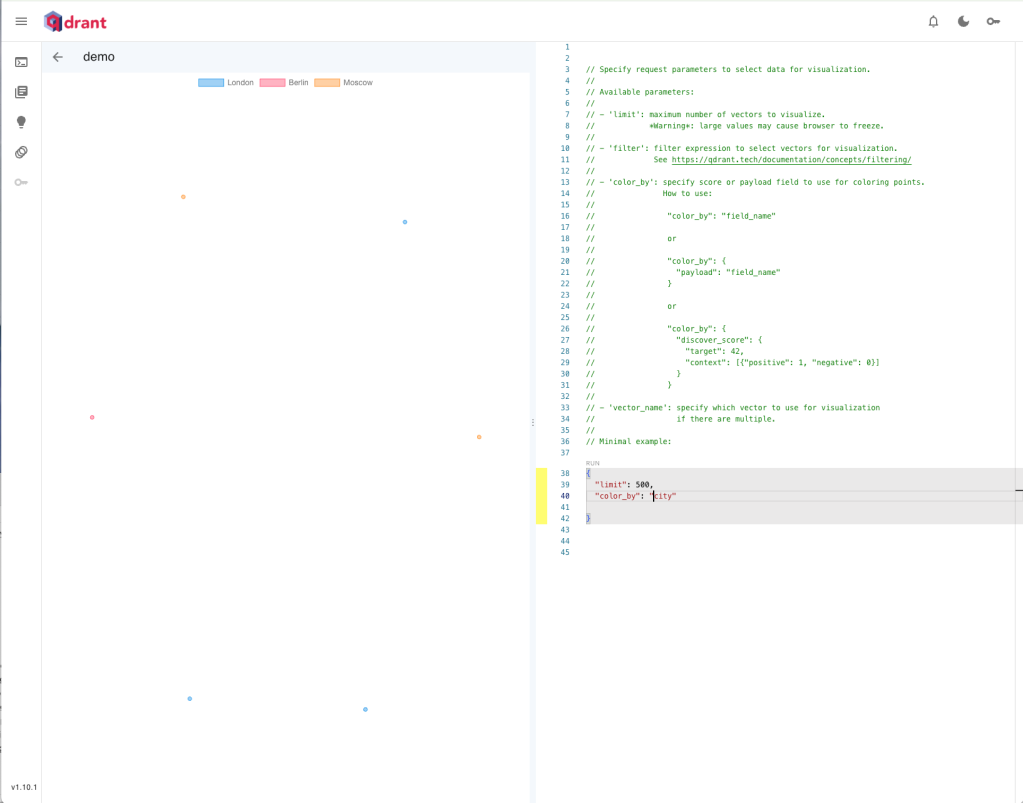

You can create a visualization of the points in a collection, filter them, limit the number of points displayed (important for large collections), and color the points based on the payload values. The visualization uses a t-SNE dimensionality reduction algorithm to display the high-dimensional vectors.

IDG

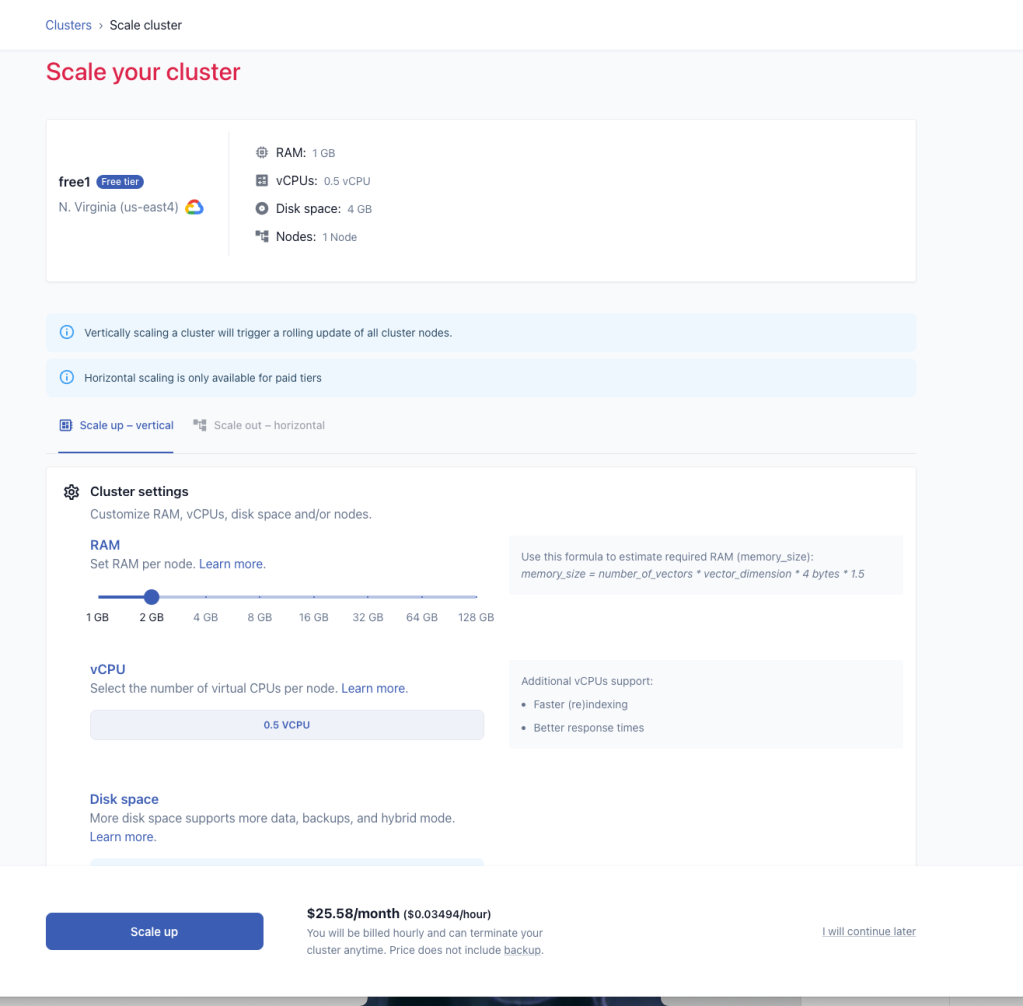

If your Qdrant Cloud cluster isn’t a good fit for your application, you can scale it horizontally and/or vertically. If you have more than one node in your cluster, you can scale it without incurring down time.

IDG

Qdrant hybrid and private clouds

You aren’t required to run Qdrant exclusively as SaaS on public clouds to use its own, very convenient managed cloud interface. You can also run Qdrant in Kubernetes clusters under your own control, either on-premises or in cloud instances, and connect those to the management interface running in the public cloud. Qdrant calls this hybrid cloud. In addition, you can run Qdrant in private cloud configurations, again either on-prem or in cloud instances, with the cluster management running in your own infrastructure, in the public cloud, on premises at the edge, and even fully air-gapped.

Learning Qdrant

Probably the easiest way to learn Qdrant is to work through its Quickstart, either locally or in the Qdrant Cloud. It’s also worthwhile to work through the Qdrant tutorials, which include semantic search and other relevant topics. Qdrant has both cloud-based data sets, accessible through the cloud interface once you have a (free) cluster running, and other practice data sets, to help you figure things out before embedding and loading up your own data. In addition, Qdrant has blog posts that explain relevant topics, and many resources about retrieval-augmented generation.

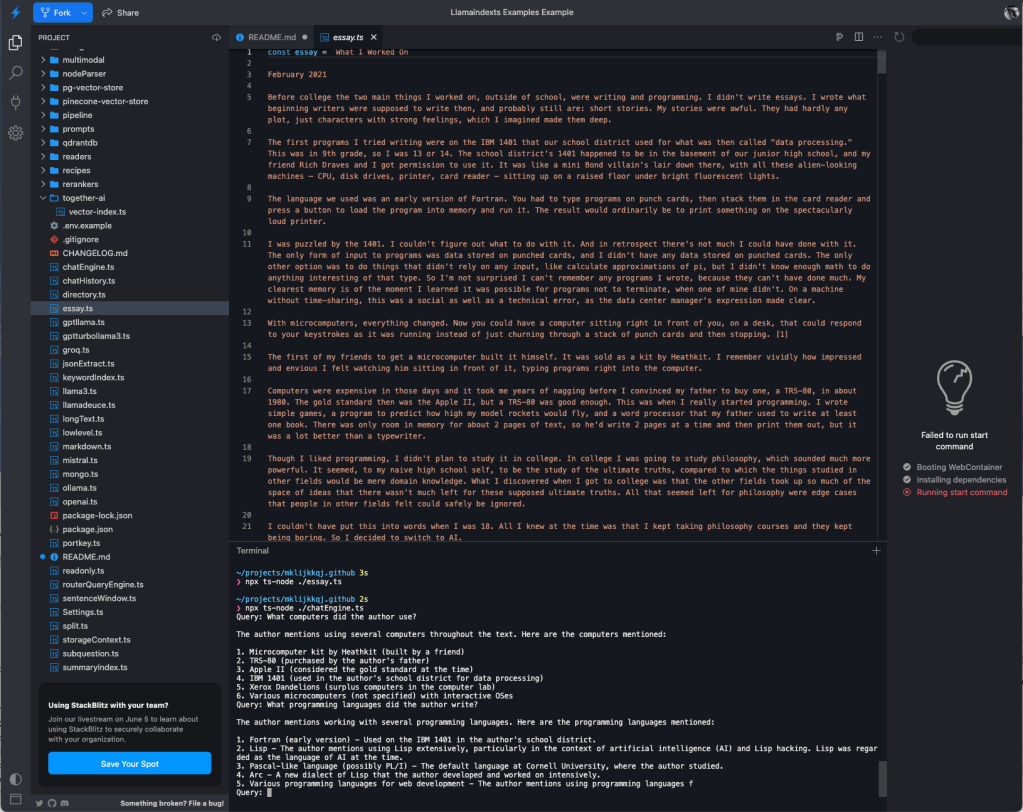

My first experience with Qdrant was actually while learning about LlamaIndex. When I was working through the LlamaIndex TypeScript samples, I discovered that the runnable code on StackBlitz was using Qdrant in a container to service its vector search function for RAG. It just worked.

Retrieval-augmented generation example using LlamaIndex, OpenAI, and Qdrant. Qdrant is running in a container within the StackBlitz environment to provide vector search; it just worked. After setting up the terminal environment with my OpenAI key, I ran essay.ts to generate an essay file and chatEngine.ts to field queries about the essay.

IDG

Qdrant is a good choice for your vector search needs, especially if you need to use vector search in production for semantic search or retrieval-augmented generation. It’s not the only choice available, by any means, but it may well fit your requirements.

Pros

- Efficient open-source vector search implementation for containers and Kubernetes

- Convenient SaaS clustered cloud implementation

- Benchmarks favorably against other open-source vector search products

- Plenty of integrations and language drivers

- Many options to tweak to optimize for your application

Cons

- Has a significant learning curve

- Has so many options that it’s easy to become confused

Cost

Qdrant open source: free. Qdrant Cloud: free starter cluster forever with 1 GB RAM; for additional RAM, backups, and replication, see https://cloud.qdrant.io/calculator to estimate costs, or create a standard cluster to get a firm price starting at $0.03494 per hour (about $25/node/month). Hybrid Cloud: starts at $0.014 per hour. Private Cloud: price on request.

Platform

Free open source locally: Docker or Python for server; Python, Go, Rust, JavaScript, TypeScript, .NET/C#, Java, Elixir, PHP, Ruby, or Java for client. Qdrant Cloud: Amazon Web Services, Google Cloud Platform, or Microsoft Azure. Qdrant Hybrid Cloud: Kubernetes.