Sometimes I hear from tech leads that they would like to improve visibility and governance over their generative artificial intelligence applications. How do you monitor and govern the usage and generation of data to address issues regarding security, resilience, privacy, and accuracy or to validate against best practices of responsible AI, among other things? Beyond simply taking these into account during the implementation phase, how do you maintain long-term observability and carry out compliance checks throughout the software’s lifecycle?

Today, we are launching an update to the AWS Audit Manager generative AI best practice framework on AWS Audit Manager. This framework simplifies evidence collection and enables you to continually audit and monitor the compliance posture of your generative AI workloads through 110 standard controls which are pre-configured to implement best practice requirements. Some examples include gaining visibility into potential personally identifiable information (PII) data that may not have been anonymized before being used for training models, validating that multi-factor authentication (MFA) is enforced to gain access to any datasets used, and periodically testing backup versions of customized models to ensure they are reliable before a system outage, among many others. These controls perform their tasks by fetching compliance checks from AWS Config and AWS Security Hub, gathering user activity logs from AWS CloudTrail and capturing configuration data by making application programming interface (API) calls to relevant AWS services. You can also create your own custom controls if you need that level of flexibility.

Previously, the standard controls included with v1 were pre-configured to work with Amazon Bedrock and now, with this new version, Amazon SageMaker is also included as a data source so you may gain tighter control and visibility of your generative AI workloads on both Amazon Bedrock and Amazon SageMaker with less effort.

Enforcing best practices for generative AI workloads

The standard controls included in the “AWS generative AI best practices framework v2” are organized under domains named accuracy, fair, privacy, resilience, responsible, safe, secure and sustainable.

Controls may perform automated or manual checks or a mix of both. For example, there is a control which covers the enforcement of periodic reviews of a model’s accuracy over time. It automatically retrieves a list of relevant models by calling the Amazon Bedrock and SageMaker APIs, but then it requires manual evidence to be uploaded at certain times showing that a review has been conducted for each of them.

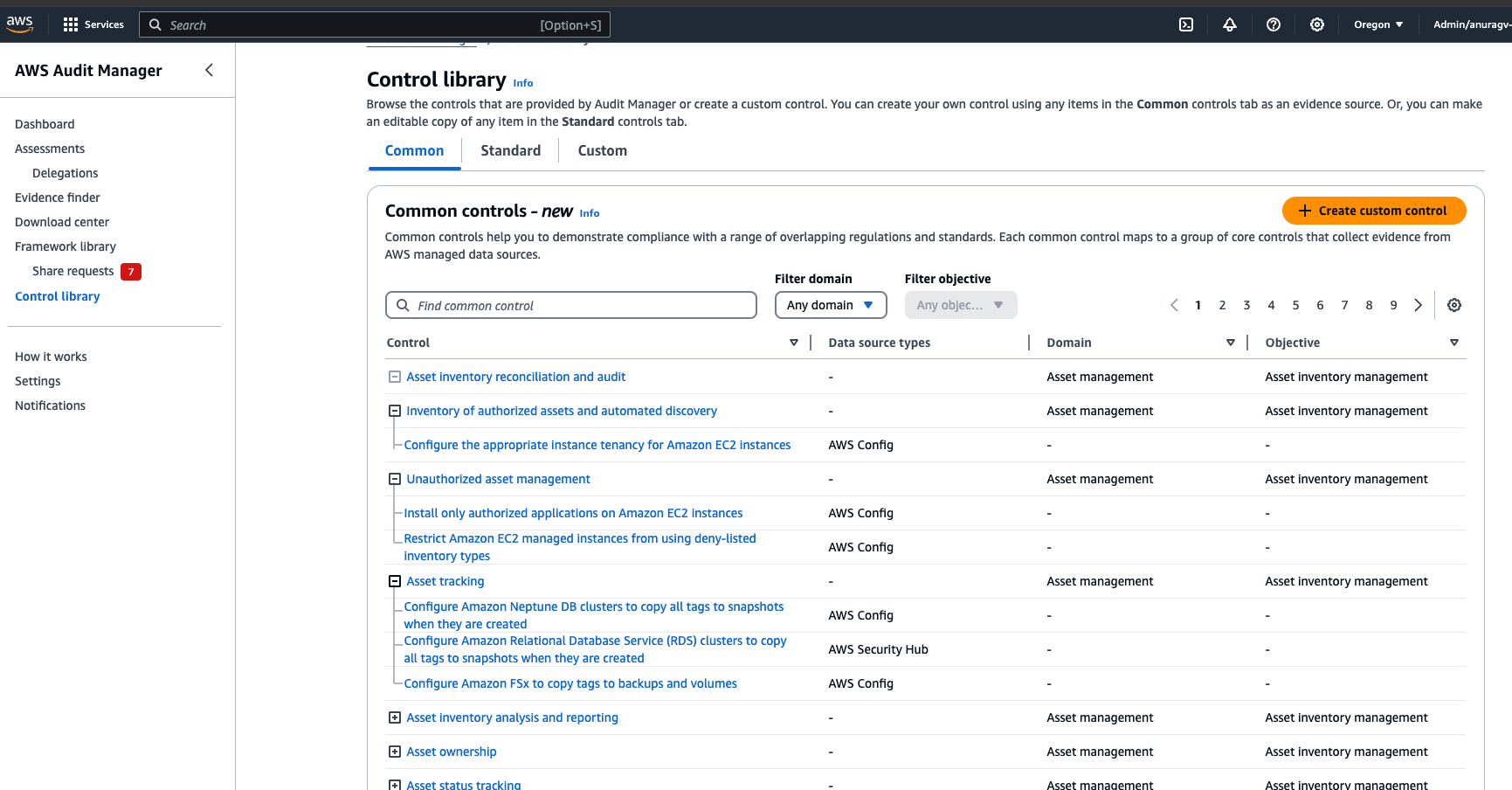

You can also customize the framework by including or excluding controls or customizing the pre-defined ones. This can be really helpful when you need to tailor the framework to meet regulations in different countries or update them as they change over time. You can even create your own controls from scratch though I would recommend you search the Audit Manager control library first for something that may be suitable or close enough to be used as a starting point as it could save you some time.

To get started you first need to create an assessment. Let’s walk through this process.

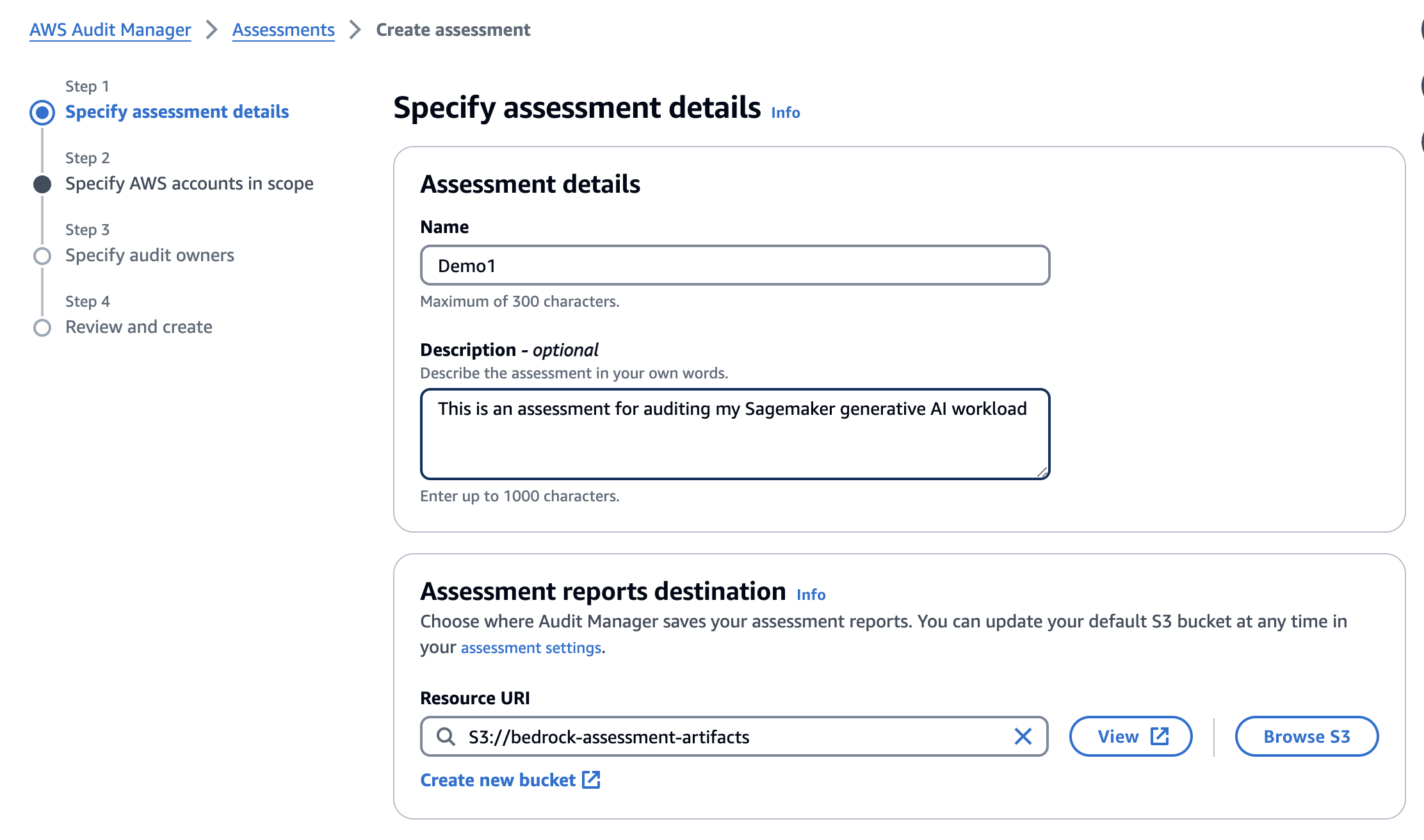

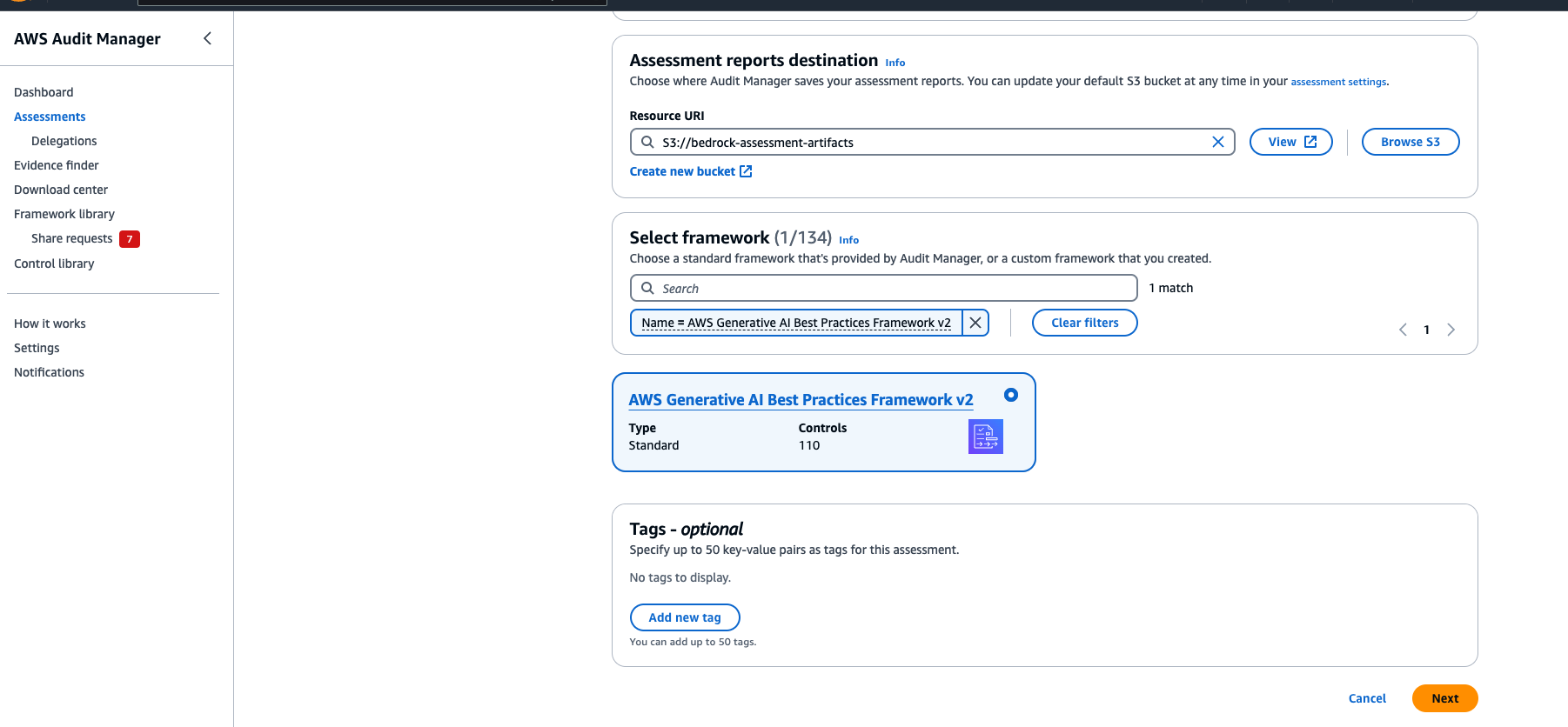

Step 1 – Assessment Details

Start by navigating to Audit Manager in the AWS Management Console and choose “Assessments”. Choose “Create assessment”; this takes you to the set up process.

Give your assessment a name. You can also add a description if you desire.

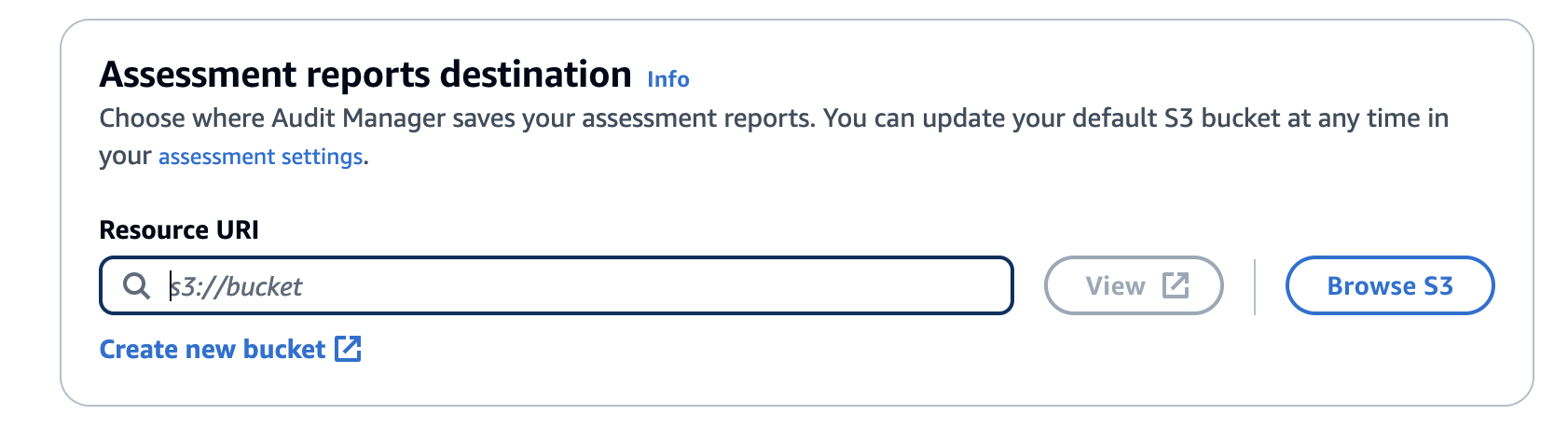

Next, pick an Amazon Simple Storage Service (S3) bucket where Audit Manager stores the assessment reports it generates. Note that you don’t have to select a bucket in the same AWS Region as the assessment, however, it is recommended since your assessment can collect up to 22,000 evidence items if you do so, whereas if you use a cross-Region bucket then that quota is significantly reduced to 3,500 items.

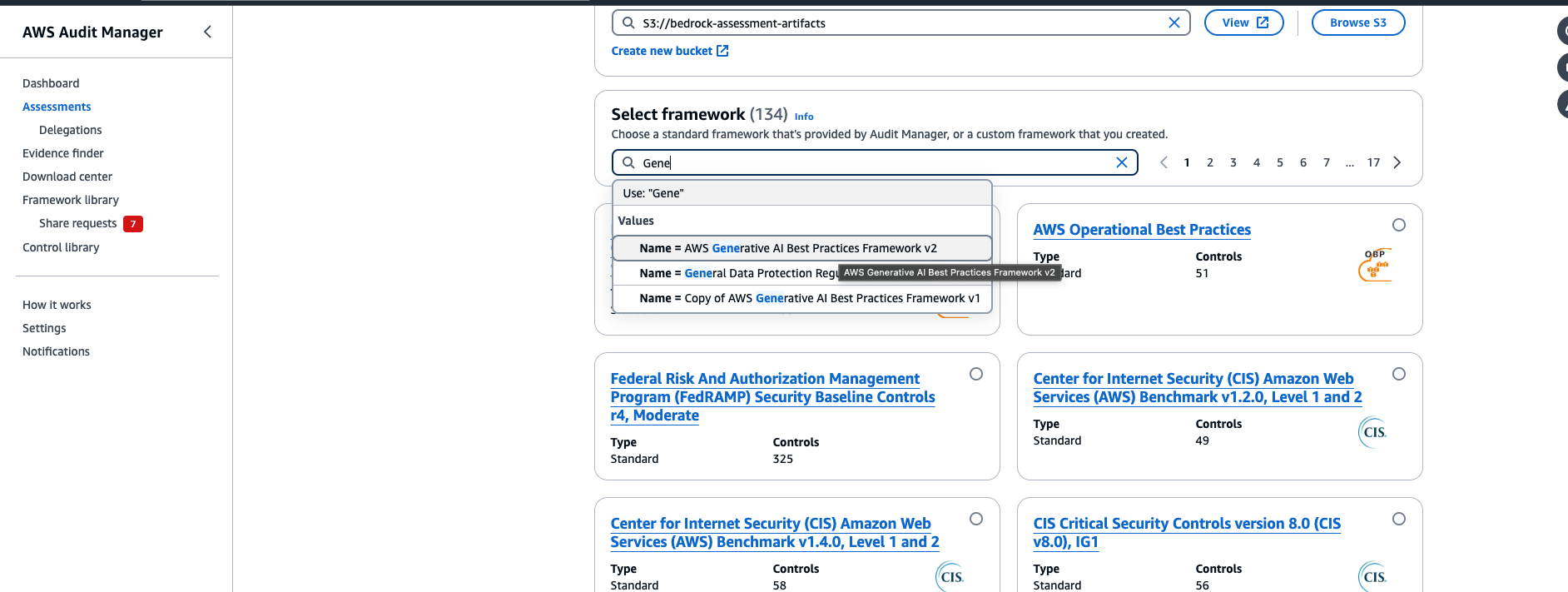

Next, we need to pick the framework we want to use. A framework effectively works as a template enabling all of its controls for use in your assessment.

In this case, we want to use the “AWS generative AI best practices framework v2” framework. Use the search box and click on the matched result that pops up to activate the filter.

You then should see the framework’s card appear .You can choose the framework’s title, if you wish, to learn more about it and browse through all the included controls.

Select it by choosing the radio button in the card.

You now have an opportunity to tag your assessment. Like any other resources, I recommend you tag this with meaningful metadata so review Best Practices for Tagging AWS Resources if you need some guidance.

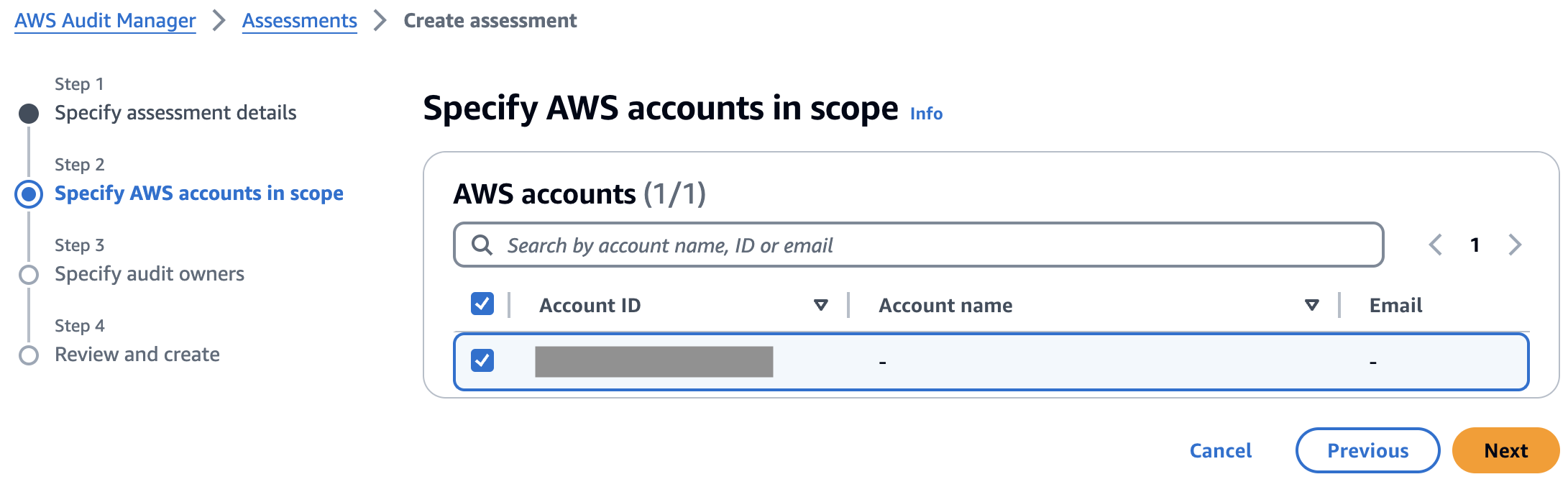

Step 2 – Specify AWS accounts in scope

This screen is quite straight-forward. Just pick the AWS accounts that you want to be continuously evaluated by the controls in your assessment. It displays the AWS account that you are currently using, by default. Audit Manager does support running assessments against multiple accounts and consolidating the report into one AWS account, however, you must explicitly enable integration with AWS Organizations first, if you would like to use that feature.

I select my own account as listed and choose “Next”

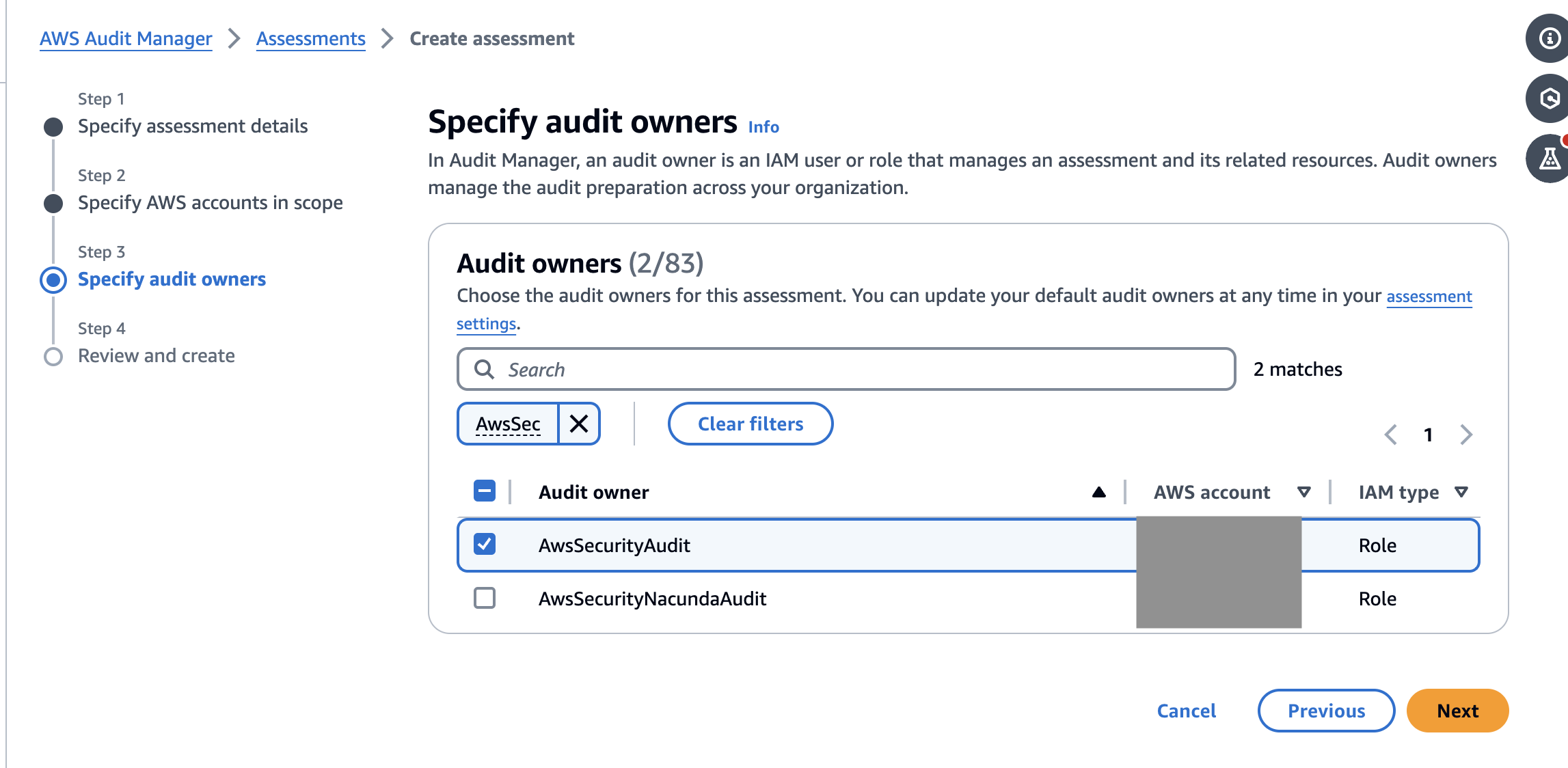

Step 3 – Specify audit owners

Now we just need to select IAM users who should have full permissions to use and manage this assessment. It’s as simple as it sounds. Pick from a list of identity and access management (IAM) users or roles available or search using the box. It’s recommended that you use the AWSAuditManagerAdministratorAccess policy.

You must select at least one, even if it’s yourself which is what I do here.

Step 4 – Review and create

All that is left to do now is review your choices and click on “Create assessment” to complete the process.

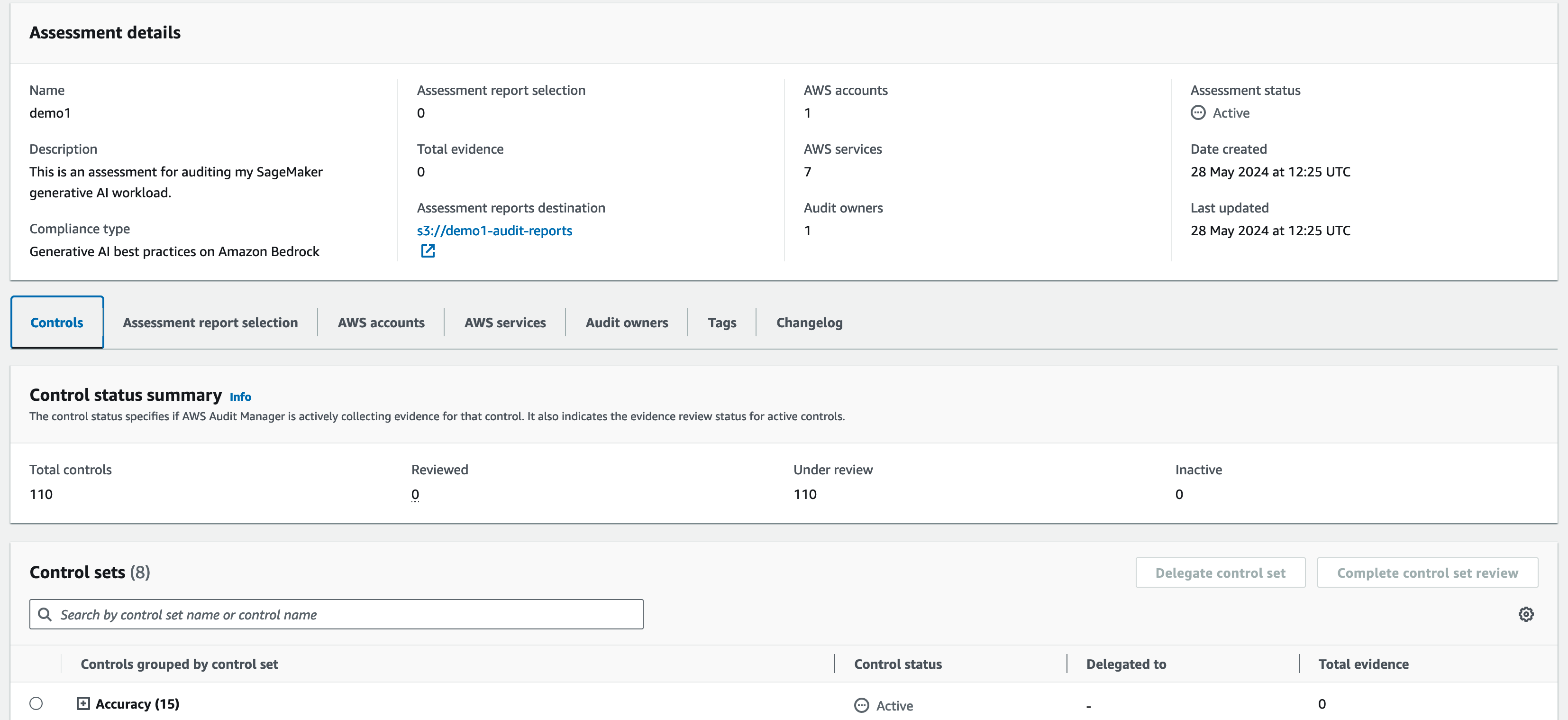

Once the assessment is created, Audit Manager starts collecting evidence in the selected AWS accounts and you start generating reports as well as surfacing any non-compliant resources in the summary screen. Keep in mind that it may take up to 24 hours for the first evaluation to show up.

You can visit the assessment details screen at any time to inspect the status for any of the controls.

Conclusion

The “AWS generative AI best practices framework v2” is available today in the AWS Audit Manager framework library in all AWS Regions where Amazon Bedrock and Amazon SageMaker are available.

You can check whether Audit Manager is available in your preferred Region by visiting AWS Services by Region.

If you want to dive deeper, check out a step-by-step guide on how to get started.