I am pleased to announce the availability of local clusters for Amazon Elastic Kubernetes Service (Amazon EKS) on AWS Outposts. It means that starting today, you can deploy your Amazon EKS cluster entirely on Outposts: both the Kubernetes control plane and the nodes.

Amazon EKS is a managed Kubernetes service that makes it easy for you to run Kubernetes on AWS and on premises. AWS Outposts is a family of fully managed solutions delivering AWS infrastructure and services to virtually any on-premises or edge location for a truly consistent hybrid experience.

To fully understand the benefits of local clusters for Amazon EKS on Outposts, I need to first share a bit of background.

Some customers use Outposts to deploy Kubernetes cluster nodes and pods close to the rest of their on-premises infrastructure. This allows their applications to benefit from low latency access to on-premises services and data while managing the cluster and the lifecycle of the nodes using the same AWS API, CLI, or AWS console as they do for their cloud-based clusters.

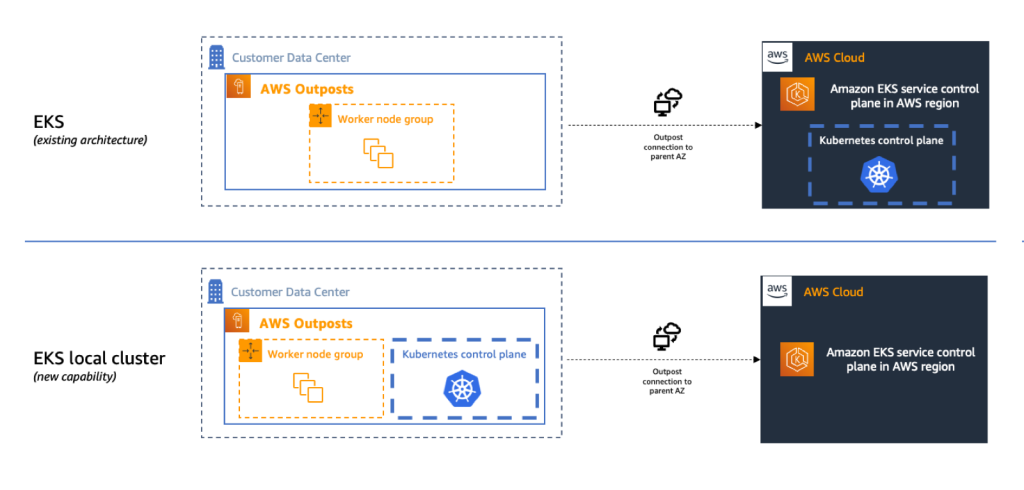

Until today, when you deployed Kubernetes applications on Outposts, you typically started by creating an Amazon EKS cluster in the AWS cloud. Then you deployed the cluster nodes on your Outposts machines. In this hybrid cluster scenario, the Kubernetes control plane runs in the parent Region of your Outposts, and the nodes are running on your on-premises Outposts. The Amazon EKS service communicates through the network with the nodes running on the Outposts machine.

But, remember: everything fails all the time. Customers told us the main challenge they have in this scenario is to manage site disconnections. This is something we cannot control, especially when you deploy Outposts on rough edges: areas with poor or intermittent network connections. When the on-premises facility is temporarily disconnected from the internet, the Amazon EKS control plane running in the cloud is unable to communicate with the nodes and the pods. Although the nodes and pods work perfectly and continue to serve the application on the on-premises local network, Kubernetes may consider them unhealthy and schedule them for replacement when the connection is reestablished (see pod eviction in Kubernetes documentation). This may lead to application downtimes when connectivity is restored.

I talked with Chris, our Kubernetes Product Manager and expert, while preparing this blog post. He told me there are at least seven distinct options to configure how a control plane reconnects to its nodes. Unless you master all these options, the system status at re-connection is unpredictable.

To simplify this, we are giving you the ability to host your entire Amazon EKS cluster on Outposts. In this configuration, both the Kubernetes control plane and your worker nodes run locally on premises on your Outposts machine. That way, your cluster continues to operate even in the event of a temporary drop in your service link connection. You can perform cluster operations such as creating, updating, and scaling applications during network disconnects to the cloud.

Local clusters are identical to Amazon EKS in the cloud and automatically deploy the latest security patches to make it easy for you to maintain an up-to-date, secure cluster. You can use the same tooling you use with Amazon EKS in the cloud and the AWS Management Console for a single interface for your clusters running on Outposts and in AWS Cloud.

Local clusters are identical to Amazon EKS in the cloud and automatically deploy the latest security patches to make it easy for you to maintain an up-to-date, secure cluster. You can use the same tooling you use with Amazon EKS in the cloud and the AWS Management Console for a single interface for your clusters running on Outposts and in AWS Cloud.

Let’s See It In Action

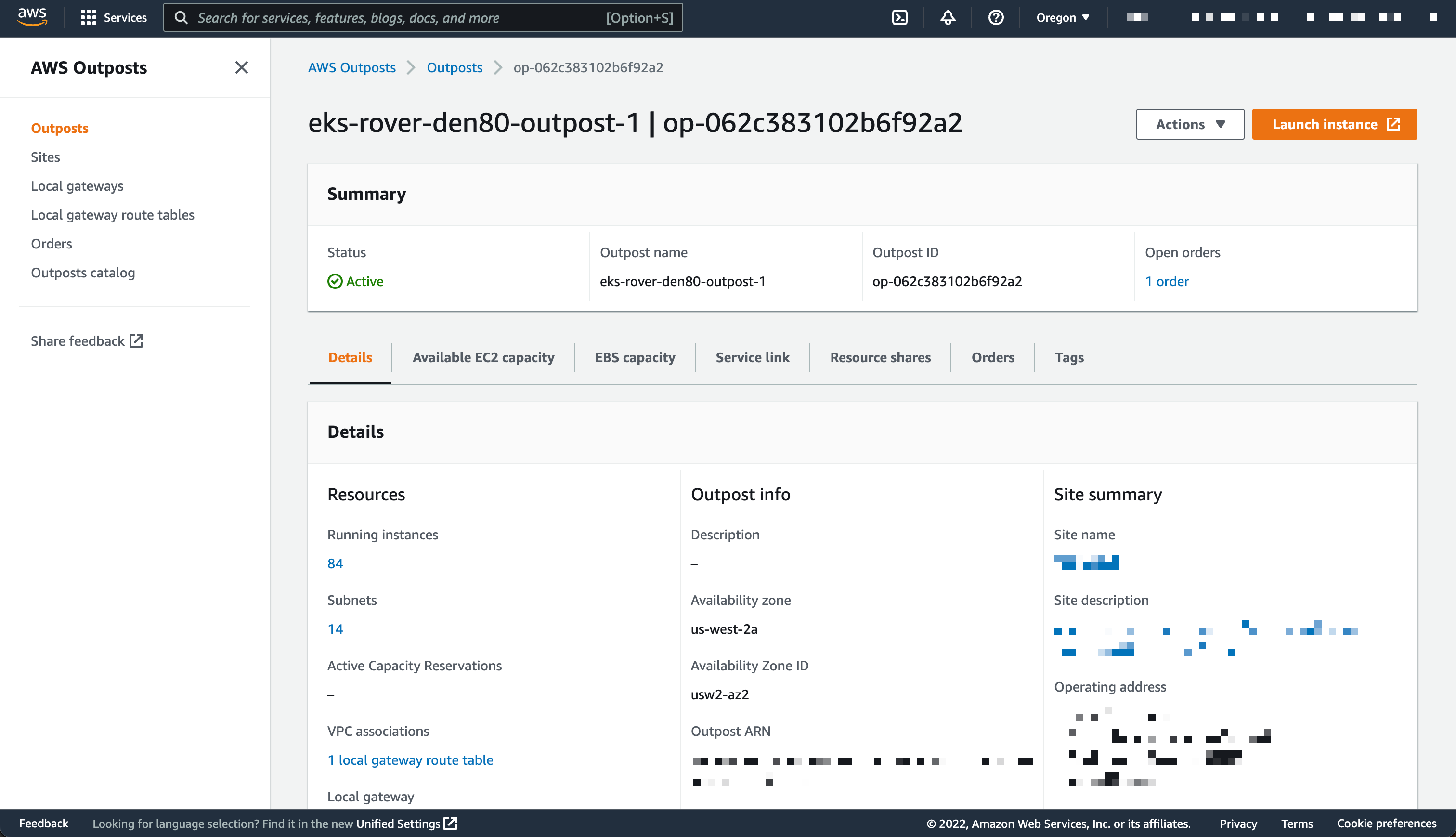

Let’s see how we can use this new capability. For this demo, I will deploy the Kubernetes control plane on Amazon Elastic Compute Cloud (Amazon EC2) instances running on premises on an Outposts rack.

I use an Outposts rack already configured. If you want to learn how to get started with Outposts, you can read the steps on the Get Started with AWS Outposts page.

This demo has two parts. First, I create the cluster. Second, I connect to the cluster and create nodes.

Creating Cluster

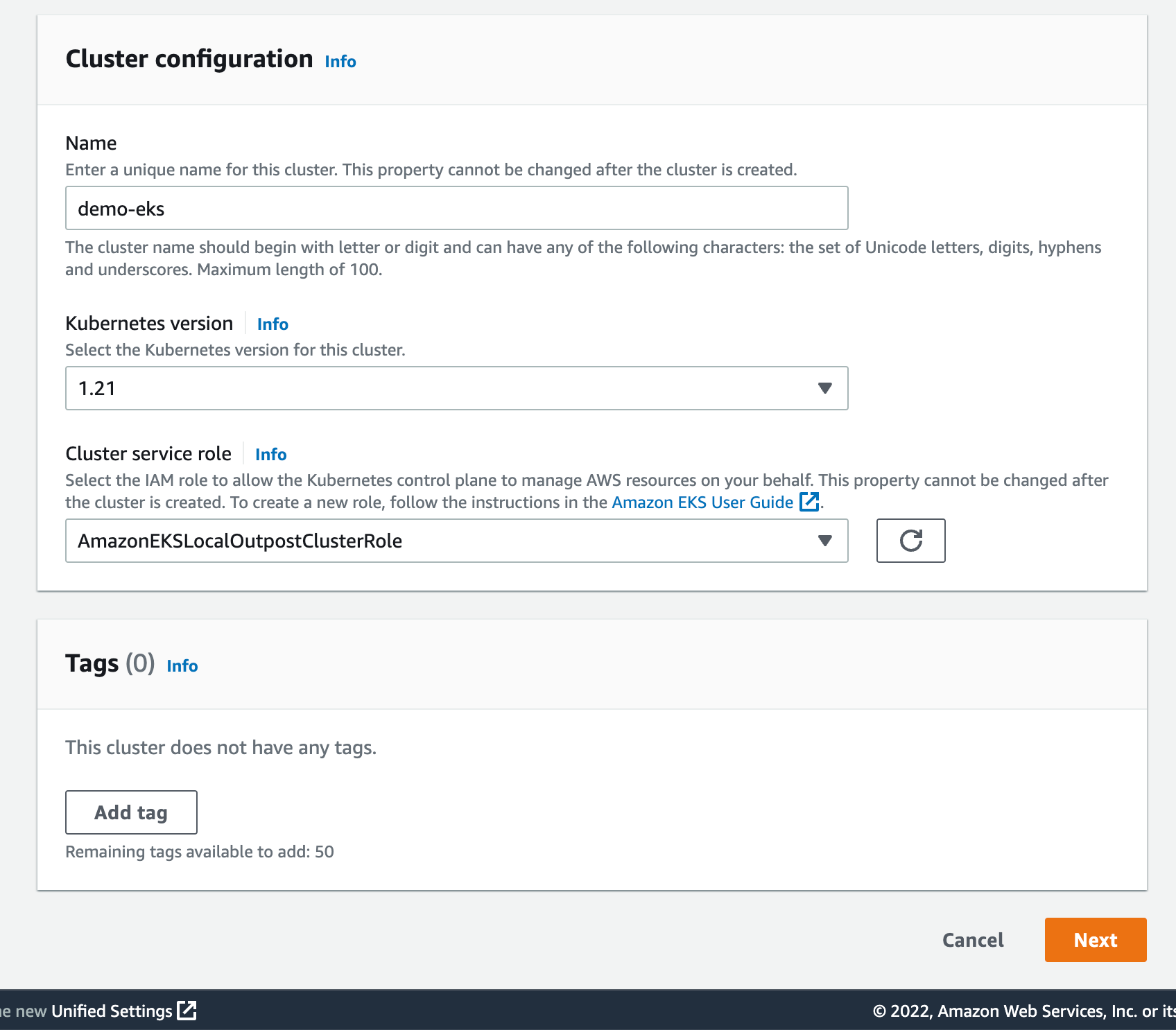

Before deploying the Amazon EKS local cluster on Outposts, I make sure I created an IAM cluster role and attached the AmazonEKSLocalOutpostClusterPolicy managed policy. This IAM cluster role will be used in cluster creation.

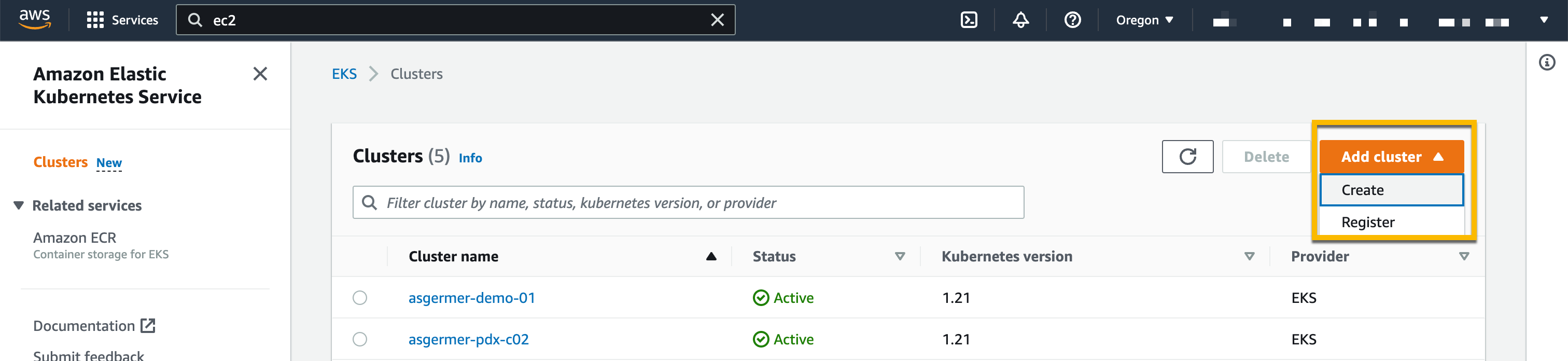

Then, I switch to the Amazon EKS dashboard, and I select Add Cluster, then Create.

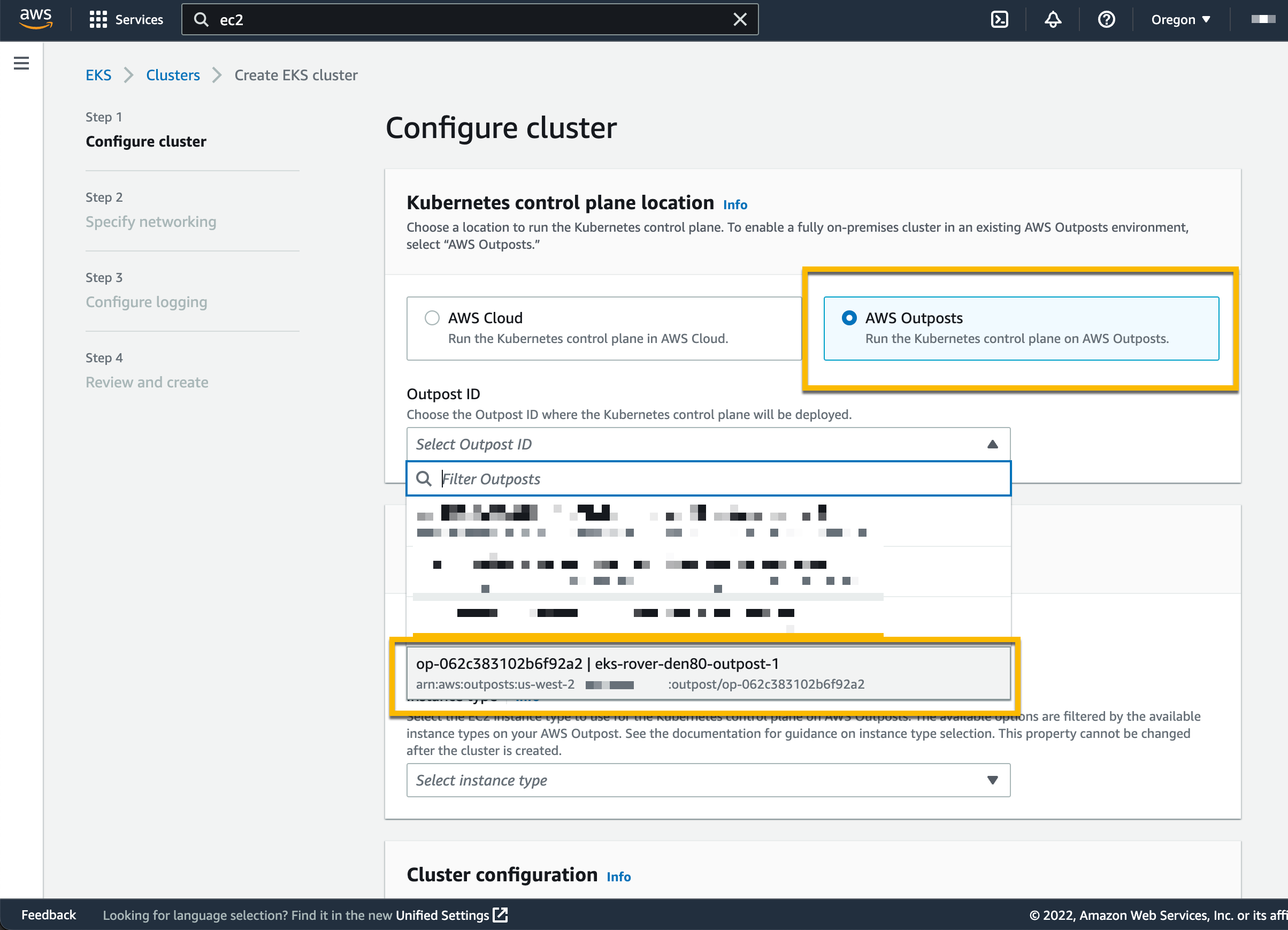

On the following page, I chose the location of the Kubernetes control plane: the AWS Cloud or AWS Outposts. I select AWS Outposts and specify the Outposts ID.

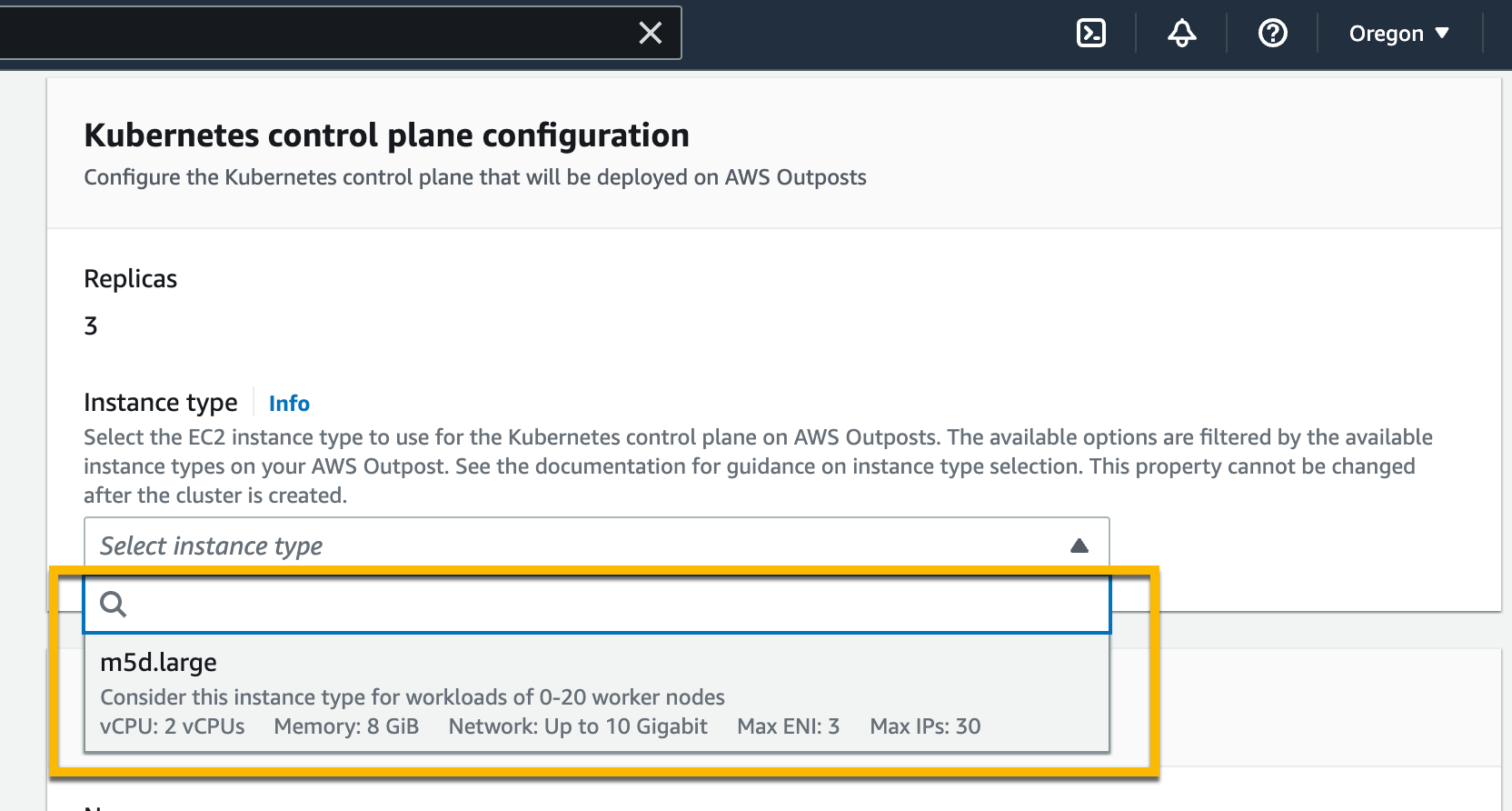

The Kubernetes control plane on Outposts is deployed on three EC2 instances for high availability. That’s why I see three Replicas. Then, I choose the instance type according to the number of worker nodes needed for workloads. For example, to handle 0–20 worker nodes, it is recommended to use m5d.large EC2 instances.

On the same page, I specify configuration values for the Kubernetes cluster, such as its Name, Kubernetes version, and the Cluster service role that I created earlier.

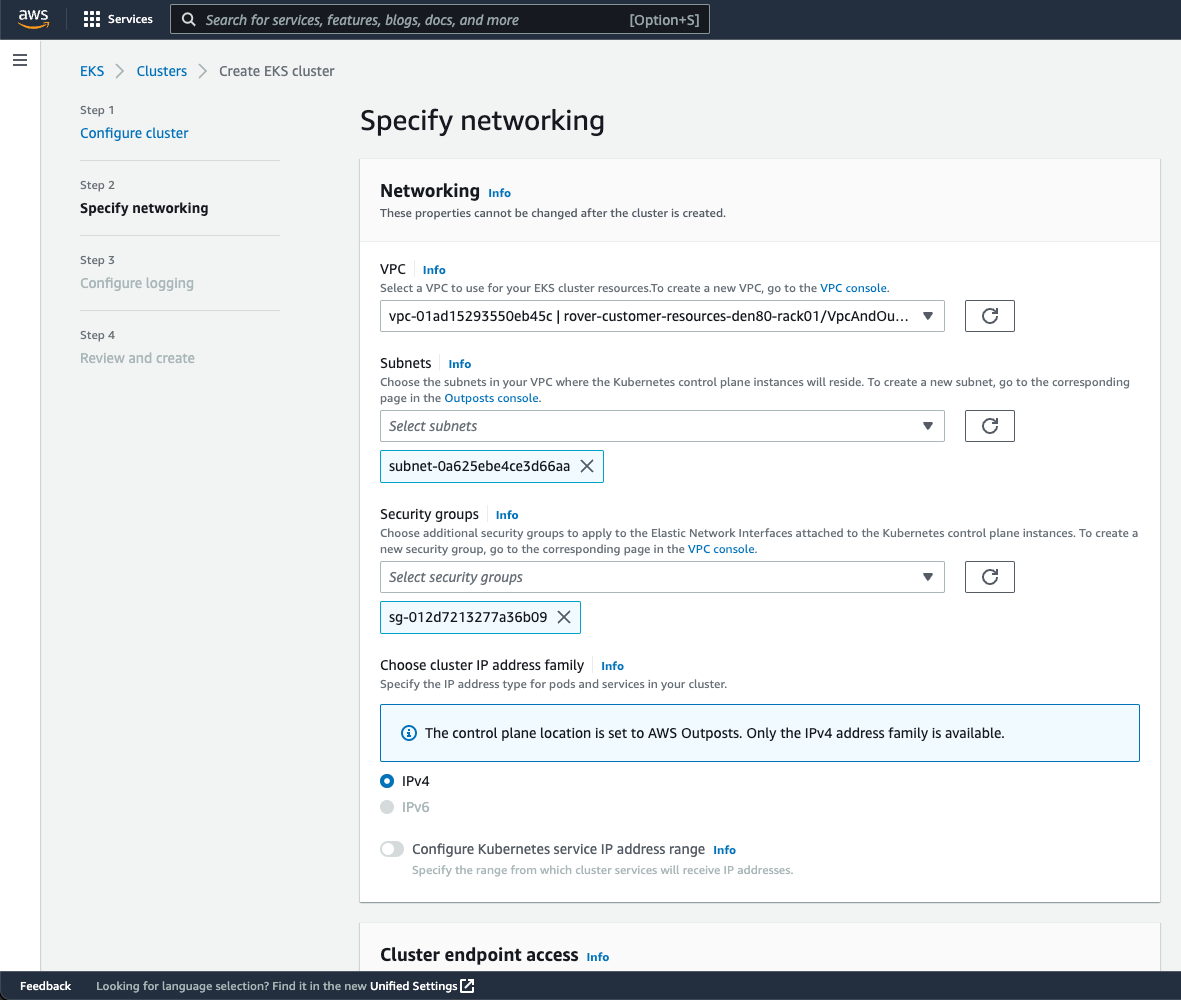

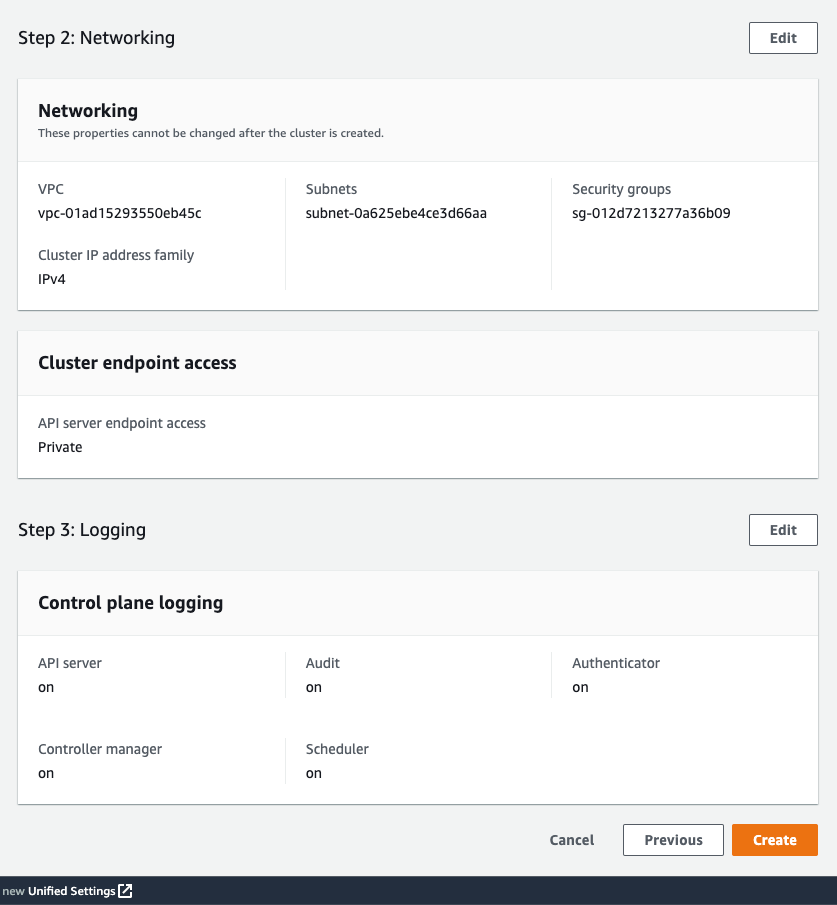

On the next page, I configure the networking options. Since Outposts is an extension of an AWS Region, I need to use the VPC and Subnets used by Outposts to enable communication between Kubernetes control plane and worker nodes. For Security Groups, Amazon EKS creates a security group for local clusters that enables communication between my cluster and my VPC. I can also define additional security groups according to my application requirements.

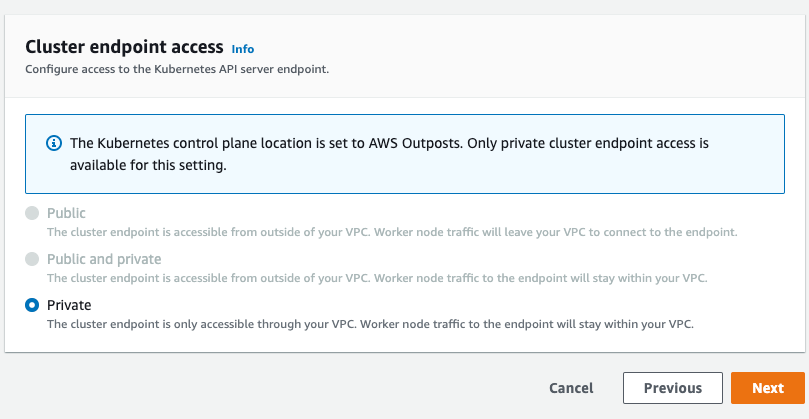

As we run the Kubernetes control plane inside Outposts, the Cluster endpoint access can only be accessed privately. This means I can only access the Kubernetes cluster through machines that are deployed in the same VPC or over the local network via the Outposts local gateway with Direct VPC Routing.

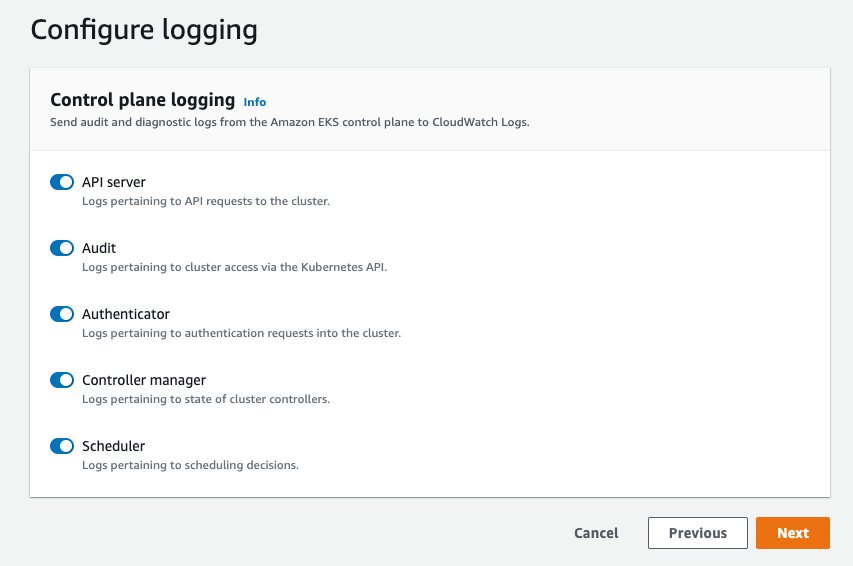

On the next page, I define logging. Logging is disabled by default, and I may enable it as needed. For more details about logging, you can read the Amazon EKS control plane logging documentation.

The last screen allows me to review all configuration options. When I’m satisfied with the configuration, I select Create to create the cluster.

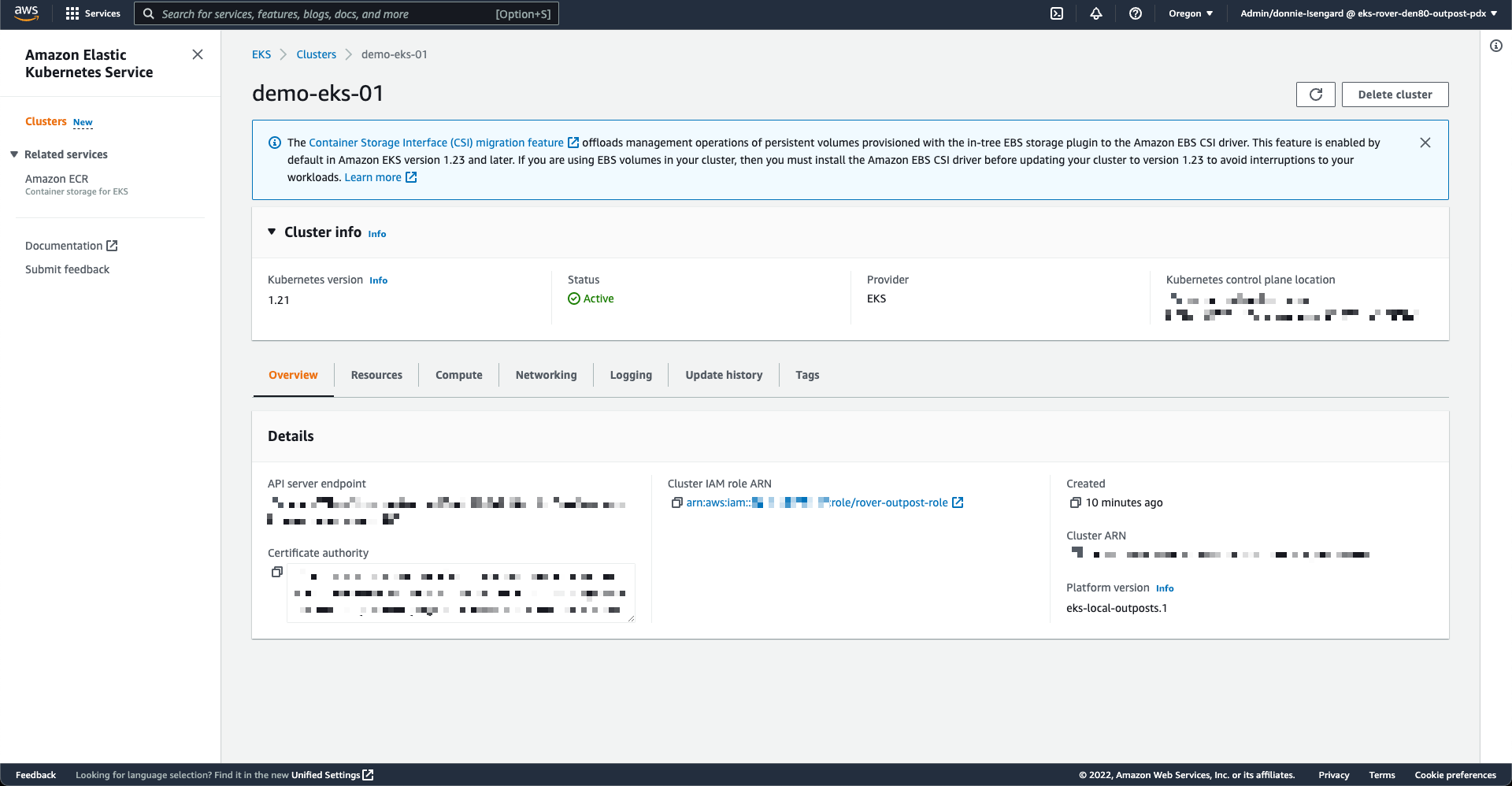

The cluster creation takes a few minutes. To check the cluster creation status, I can use the console or the terminal with the following command:

$ aws eks describe-cluster

--region <REGION_CODE>

--name <CLUSTER_NAME>

--query "cluster.status"The Status section tells me when the cluster is created and active.

In addition to using the AWS Management Console, I can also create a local cluster using the AWS CLI. Here is the command snippet to create a local cluster with the AWS CLI:

$ aws eks create-cluster

--region <REGION_CODE>

--name <CLUSTER_NAME>

--resources-vpc-config subnetIds=<SUBNET_ID>

--role-arn <ARN_CLUSTER_ROLE>

--outpost-config controlPlaneInstanceType=<INSTANCE_TYPE>

--outpostArns=<ARN_OUTPOST>Connecting to the Cluster

The endpoint access for a local cluster is private; therefore, I can access it from a local gateway with Direct VPC Routing or from machines that are in the same VPC. To find out how to use local gateways with Outposts, you can follow the information on the Working with local gateways page. For this demo, I use an EC2 instance as a bastion host, and I manage the Kubernetes cluster using kubectl command.

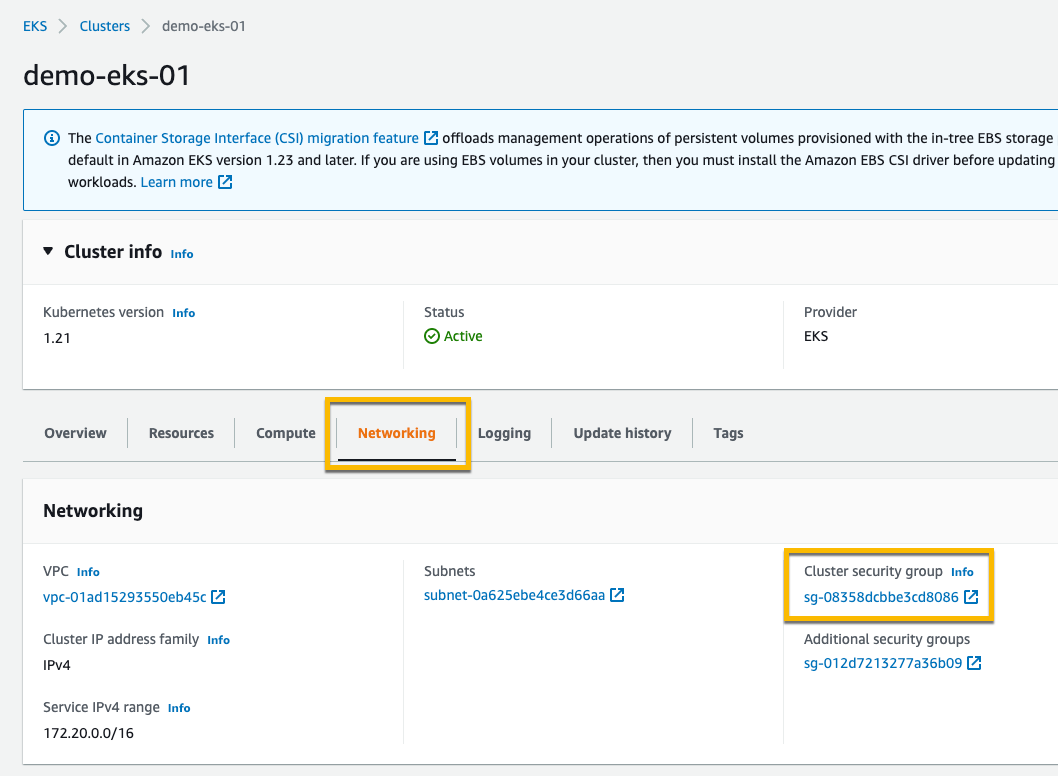

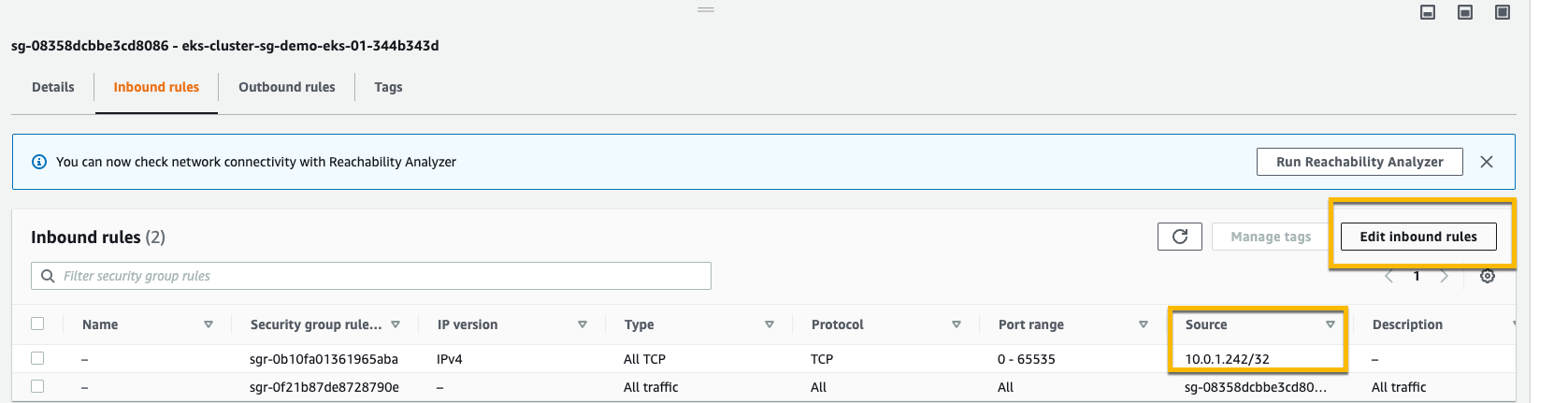

The first thing I do is edit Security Groups to open traffic access from the bastion host. I go to the detail page of the Kubernetes cluster and select the Networking tab. Then I select the link in Cluster security group.

Then, I add inbound rules, and I provide access for the bastion host by specifying its IP address.

Once I’ve allowed the access, I create kubeconfig in the bastion host by running the command:

$ aws eks update-kubeconfig --region <REGION_CODE> --name <CLUSTER_NAME>Finally, I use kubectl to interact with the Kubernetes API server, just like usual.

$ kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

ip-10-X-Y-Z.us-west-2.compute.internal NotReady control-plane,master 10h v1.21.13 10.X.Y.Z <none> Bottlerocket OS 1.8.0 (aws-k8s-1.21) 5.10.118 containerd://1.6.6+bottlerocket

ip-10-X-Y-Z.us-west-2.compute.internal NotReady control-plane,master 10h v1.21.13 10.X.Y.Z <none> Bottlerocket OS 1.8.0 (aws-k8s-1.21) 5.10.118 containerd://1.6.6+bottlerocket

ip-10-X-Y-Z.us-west-2.compute.internal NotReady control-plane,master 9h v1.21.13 10.X.Y.Z <none> Bottlerocket OS 1.8.0 (aws-k8s-1.21) 5.10.118 containerd://1.6.6+bottlerocketKubernetes local clusters running on AWS Outposts run on three EC2 instances. We see on the output above that the status of three worker nodes is NotReady. This is because they are used by the control plane exclusively, and we cannot use them to schedule pods.

From this stage, you can deploy self-managed node groups using the Amazon EKS local cluster.

Pricing and Availability

Amazon EKS local clusters are charged at the same price as traditional EKS clusters. It starts at $0.10/hour. The EC2 instances required to deploy the Kubernetes control plane and nodes on Outposts are included in the price of the Outposts. As usual, the pricing page has the details.

Amazon EKS local clusters are available in all AWS Regions where Outposts is available.

Go build and create your first EKS local cluster today!

— seb and Donnie.