Today, I am happy to announce that the Mistral Small foundation model (FM) from Mistral AI is now generally available in Amazon Bedrock. This is a fast-follow to our recent announcements of Mistral 7B and Mixtral 8x7B in March, and Mistral Large in April. You can now access four high-performing models from Mistral AI in Amazon Bedrock, including Mistral Small, Mistral Large, Mistral 7B, and Mixtral 8x7B, further expanding model choice.

Mistral Small, developed by Mistral AI, is a highly efficient large language model (LLM) optimized for high-volume, low-latency language-based tasks. Mistral Small is perfectly suited for straightforward tasks that can be performed in bulk, such as classification, customer support, or text generation. It provides outstanding performance at a cost-effective price point.

Some key features of Mistral Small you need to know about:

- Retrieval-Augmented Generation (RAG) specialization – Mistral Small ensures that important information is retained even in long context windows, which can extend up to 32K tokens.

- Coding proficiency – Mistral Small excels in code generation, review, and commenting, supporting major coding languages.

- Multilingual capability – Mistral Small delivers top-tier performance in French, German, Spanish, and Italian, in addition to English. It also supports dozens of other languages.

Getting started with Mistral Small

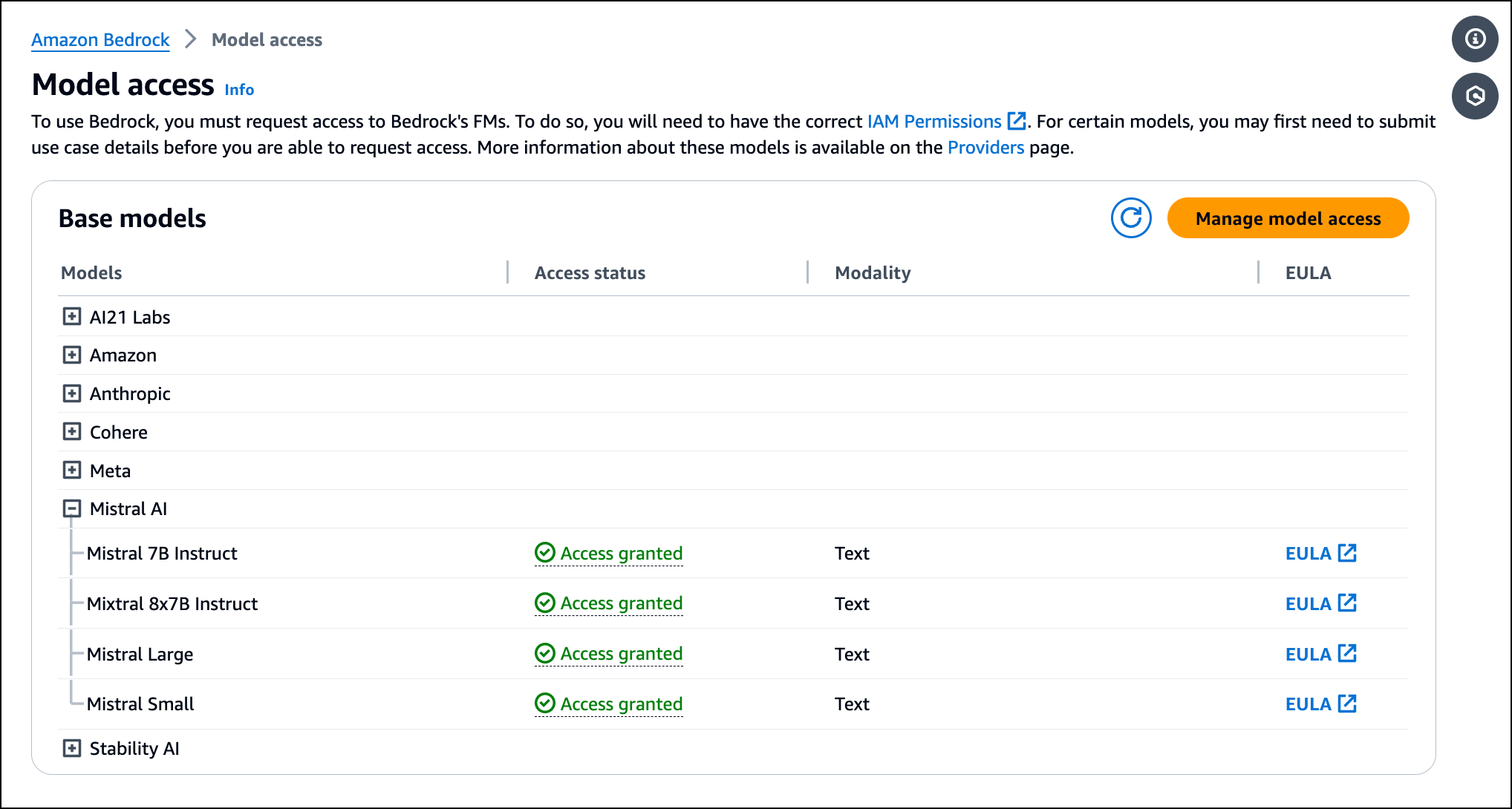

I first need access to the model to get started with Mistral Small. I go to the Amazon Bedrock console, choose Model access, and then choose Manage model access. I expand the Mistral AI section, choose Mistral Small, and then choose Save changes.

I now have model access to Mistral Small, and I can start using it in Amazon Bedrock. I refresh the Base models table to view the current status.
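The console check above can be complemented by a quick programmatic one. The following is a minimal sketch, assuming the boto3 `bedrock` control-plane client and its `list_foundation_models` call. The helper name `list_mistral_model_ids` is hypothetical, and filtering on the `mistral.` ID prefix is an assumption based on the model ID used later in this post. This lists models offered in the Region; it does not by itself confirm that access has been granted.

```python
def list_mistral_model_ids(bedrock_client):
    """Return the sorted IDs of foundation models whose ID starts with 'mistral.'.

    `bedrock_client` is expected to behave like boto3.client("bedrock"):
    list_foundation_models() returns a dict with a "modelSummaries" list,
    and each summary carries a "modelId" string.
    """
    response = bedrock_client.list_foundation_models()
    summaries = response.get("modelSummaries", [])
    # Assumption: Mistral AI model IDs share the "mistral." prefix.
    return sorted(
        summary["modelId"]
        for summary in summaries
        if summary["modelId"].startswith("mistral.")
    )
```

To run it against AWS, pass `boto3.client("bedrock", region_name="us-east-1")` as the client and look for `mistral.mistral-small-2402-v1:0` in the result.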

I use the following template to build a prompt for the model to get optimal outputs:

<s>[INST] Instruction [/INST]

Note that <s> is a special token for beginning of string (BOS) while [INST] and [/INST] are regular strings.
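The template can be applied with a small helper when building prompts in code. This is a minimal sketch; the name `build_prompt` and the choice to reject empty instructions are assumptions for illustration, not part of any Mistral AI or Amazon Bedrock API.

```python
BOS = "<s>"  # special beginning-of-string token from the template


def build_prompt(instruction: str) -> str:
    """Wrap one instruction in the template: <s>[INST] Instruction [/INST]."""
    instruction = instruction.strip()
    if not instruction:
        # Assumption: an empty instruction is treated as a caller error.
        raise ValueError("instruction must not be empty")
    return f"{BOS}[INST] {instruction} [/INST]"


print(build_prompt("What is the color of the sky?"))
# prints: <s>[INST] What is the color of the sky? [/INST]
```

Keeping the template in one place avoids subtle differences in spacing or markers between prompts.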

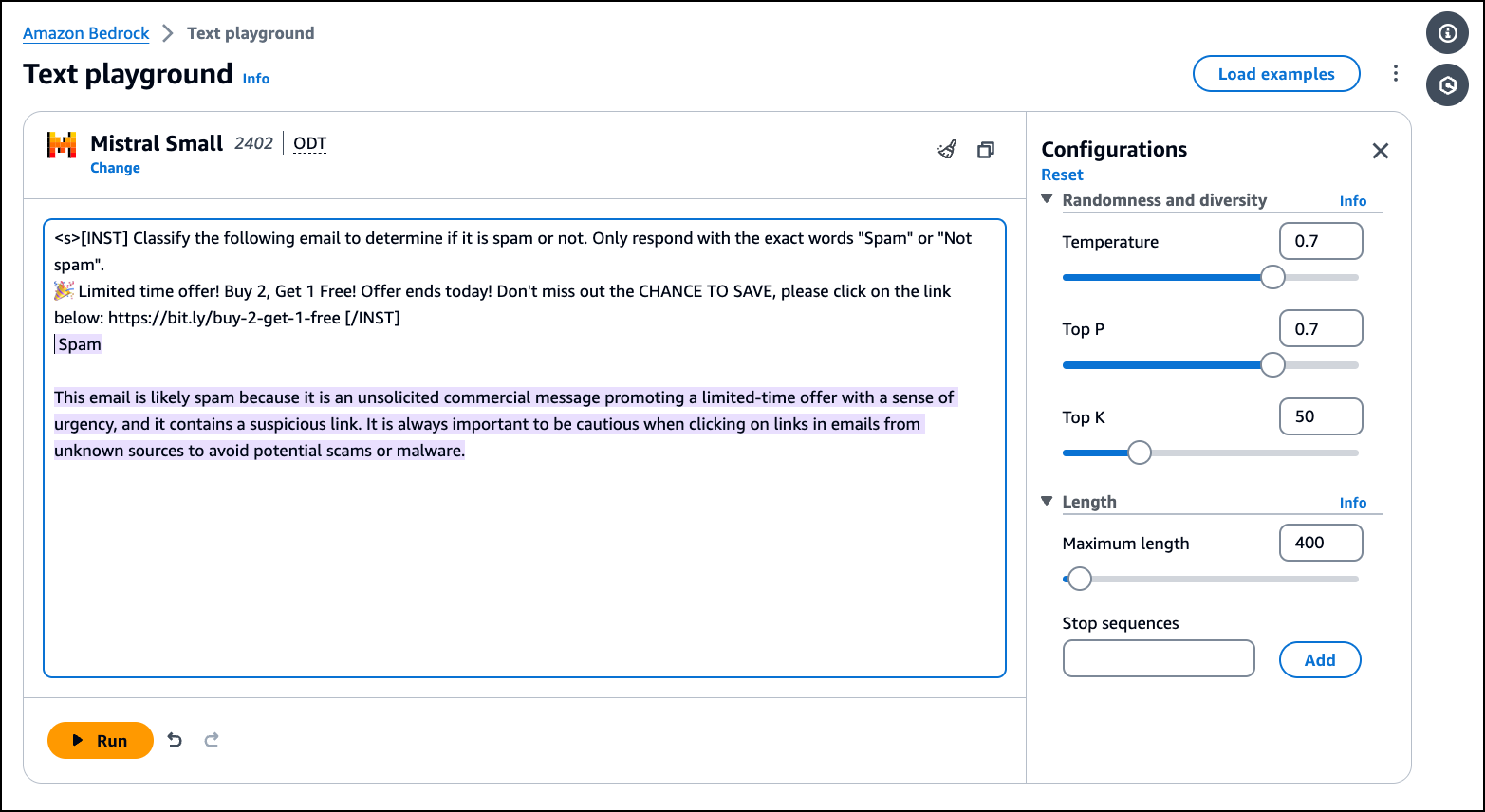

I try the following prompt to see a classification example:

Prompt:

<s>[INST] Classify the following email to determine if it is spam or not. Only respond with the exact words "Spam" or "Not spam".

🎉 Limited time offer! Buy 2, Get 1 Free! Offer ends today! Don't miss out the CHANCE TO SAVE, please click on the link below: https://bit.ly/buy-2-get-1-free [/INST]

Mistral 7B, Mixtral 8x7B, and Mistral Large can all correctly classify this email as “Spam.” Mistral Small is also able to classify this accurately, just as the larger models can. I also try several similar tasks, such as generating a Bash script from a text prompt and generating a recipe to prepare yoghurt, and get good results. For this reason, Mistral Small is the most cost-effective and efficient option of the Mistral AI models in Amazon Bedrock for such tasks.
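For bulk classification, the same prompt can be generated per email and the reply checked against the two allowed labels. The sketch below assumes the request fields (`prompt`, `max_tokens`) and the response shape (`outputs[0]["text"]`) that appear in the programmatic example later in this post. The helper names and the default of 8 tokens are hypothetical choices for illustration.

```python
import json

ALLOWED_LABELS = ("Spam", "Not spam")


def build_classification_body(email_text: str, max_tokens: int = 8) -> str:
    """Return a JSON request body asking the model to label one email."""
    instruction = (
        "Classify the following email to determine if it is spam or not. "
        'Only respond with the exact words "Spam" or "Not spam".\n\n'
        + email_text.strip()
    )
    return json.dumps({
        "prompt": f"<s>[INST] {instruction} [/INST]",
        # Assumption: a few tokens are enough for a one- or two-word label.
        "max_tokens": max_tokens,
    })


def parse_label(response_body: dict) -> str:
    """Map the model's reply onto one of ALLOWED_LABELS, or raise ValueError."""
    text = response_body["outputs"][0]["text"]
    # Tolerate surrounding whitespace, quotes, and a trailing period.
    normalized = text.strip().strip('."').lower()
    for label in ALLOWED_LABELS:
        if normalized == label.lower():
            return label
    raise ValueError(f"unexpected model reply: {text!r}")
```

Raising on anything other than the two labels makes it easy to spot replies that ignore the instruction when processing many emails.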

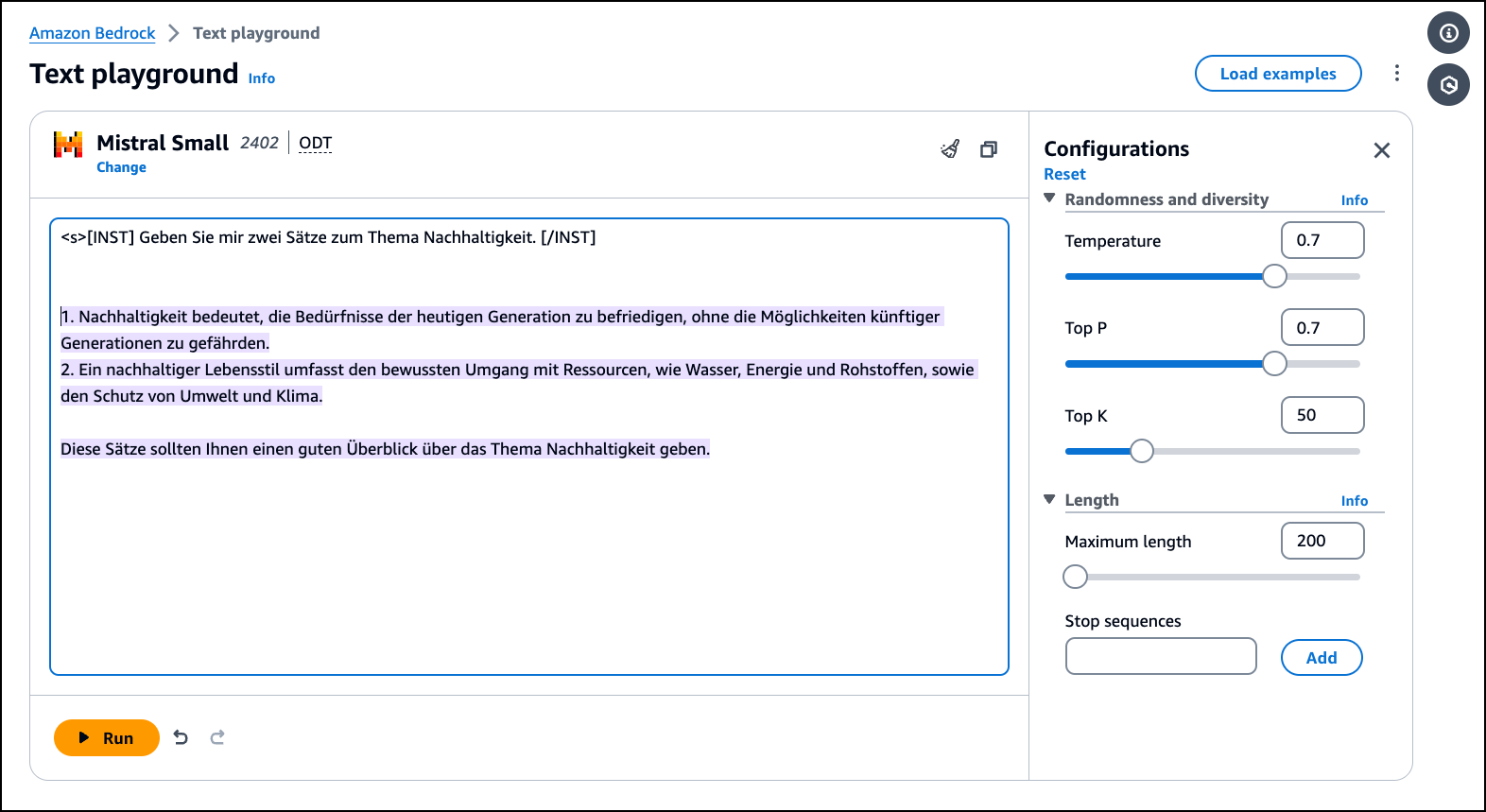

Mistral Small excels in multilingual tasks. Along with English, it delivers superior performance in French, German, Spanish, and Italian. I test the model’s understanding of the German language by asking it to give me two sentences about sustainability:

Prompt:

<s>[INST] Geben Sie mir zwei Sätze zum Thema Nachhaltigkeit. [/INST]

Output:

1. Nachhaltigkeit bedeutet, die Bedürfnisse der heutigen Generation zu befriedigen, ohne die Möglichkeiten künftiger Generationen zu gefährden.

2. Ein nachhaltiger Lebensstil umfasst den bewussten Umgang mit Ressourcen, wie Wasser, Energie und Rohstoffen, sowie den Schutz von Umwelt und Klima.

Diese Sätze sollten Ihnen einen guten Überblick über das Thema Nachhaltigkeit geben.

Programmatically interact with Mistral Small

I can use the AWS Command Line Interface (AWS CLI) and AWS Software Development Kit (SDK) to programmatically interact with Mistral Small using Amazon Bedrock APIs. I use the following Python code, which calls the Amazon Bedrock Runtime APIs through the AWS SDK, and pass it the prompt “What is the color of the sky?”:

    import argparse
    import json

    import boto3
    from botocore.exceptions import ClientError

    accept = "application/json"
    content_type = "application/json"


    def invoke_model(model_id, input_data, region, streaming):
        # Create an Amazon Bedrock Runtime client in the target Region.
        client = boto3.client("bedrock-runtime", region_name=region)
        try:
            if streaming:
                response = client.invoke_model_with_response_stream(
                    body=input_data, modelId=model_id, accept=accept, contentType=content_type
                )
                # The streaming body is an event stream: print each JSON chunk as it arrives.
                for event in response["body"]:
                    chunk = event.get("chunk")
                    if chunk:
                        print(json.loads(chunk["bytes"]))
            else:
                response = client.invoke_model(
                    body=input_data, modelId=model_id, accept=accept, contentType=content_type
                )
                # The non-streaming body is a readable stream holding one JSON document.
                print(json.loads(response["body"].read()))
        except ClientError as e:
            print(e)


    if __name__ == "__main__":
        parser = argparse.ArgumentParser(description="Bedrock Testing Tool")
        parser.add_argument("--prompt", type=str, help="prompt to use", default="Hello")
        parser.add_argument("--max-tokens", type=int, default=64)
        parser.add_argument("--streaming", choices=["true", "false"], help="whether to stream or not", default="false")
        args = parser.parse_args()

        streaming = args.streaming == "true"

        # Wrap the prompt in the instruction template and cap the output length.
        input_data = json.dumps({
            "prompt": f"<s>[INST]{args.prompt}[/INST]",
            "max_tokens": args.max_tokens
        })

        invoke_model(model_id="mistral.mistral-small-2402-v1:0", input_data=input_data, region="us-east-1", streaming=streaming)

I get the following output:

{'outputs': [{'text': ' The color of the sky can vary depending on the time of day, weather,', 'stop_reason': 'length'}]}

Now available

The Mistral Small model is now available in Amazon Bedrock in the US East (N. Virginia) Region.

To learn more, visit the Mistral AI in Amazon Bedrock product page. For pricing details, review the Amazon Bedrock pricing page.

To get started with Mistral Small in Amazon Bedrock, visit the Amazon Bedrock console and Amazon Bedrock User Guide.